TurtleBot Mobile Robot (07): Keyboard and Joystick Teleoperation

Indigo introduces new ways to interact with your TurtleBot, namely the Qt and Android remocons.

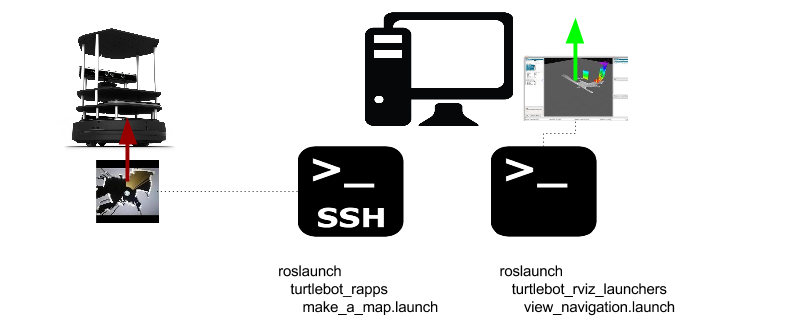

1. The early way: roslaunch + SSH

In previous ROS releases, launching and tearing down tasks was managed primarily via ssh, rosrun and roslaunch. This is still possible in Indigo: you can launch a robot task (e.g. making a map) in an ssh terminal and, in another terminal, launch the rviz monitoring program on the PC side.

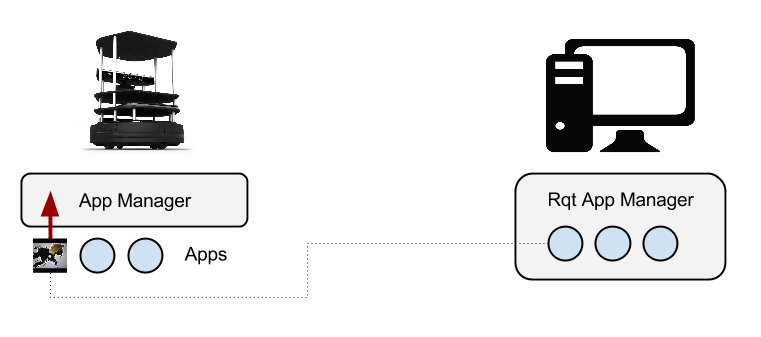

2. The traditional way: Rapp

Indigo officially introduces a couple of new ways for both developers and users to interact with the robot, while keeping the old 'ssh-roslaunch' style available.

Besides being an easy way to see what is available to run, the app manager also ensures that the running task is maintained on the robot: you do not need to keep an ssh connection to the robot open over the wireless link, nor does rqt_app_manager need to keep running.

It is, however, limited to one-sided interactions (i.e. it won't manage the rviz launch on the PC side).

Programming example.

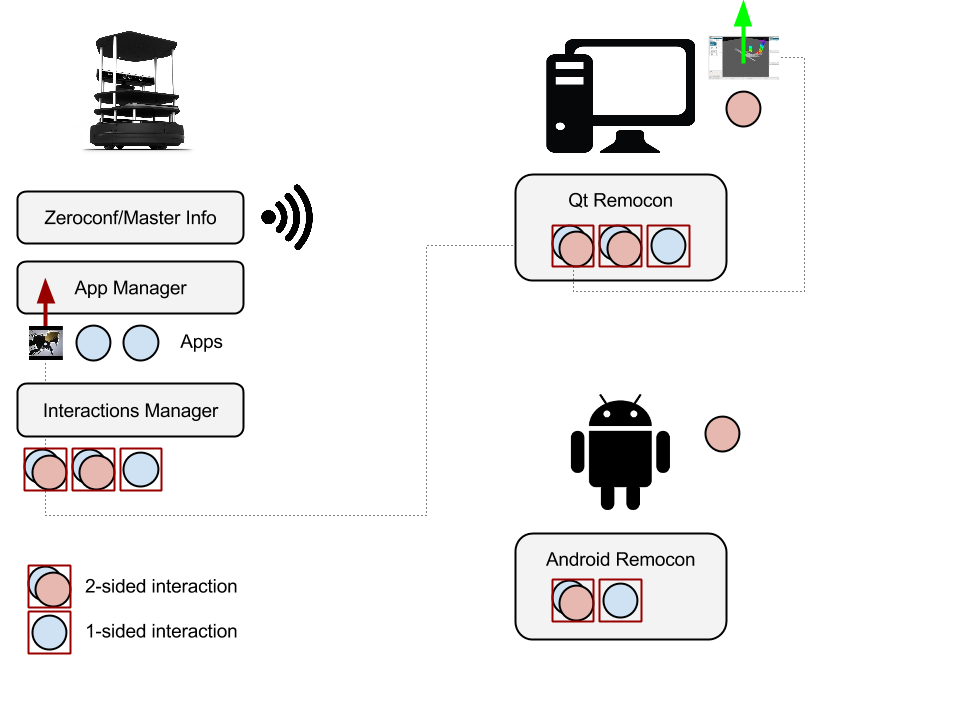

3. The new way: Interactions

Interactions extend application management to two-sided interactions and make it possible to interact from devices other than your PC, e.g. Android phones and tablets. The available interactions, the devices they are permitted to run on, and the synchronisation of launch/tear down of two-sided interactions are maintained by the interactions manager, which sits between the application manager and the remocons.

3.1 PC

Programming example 1.

Programming example 2.

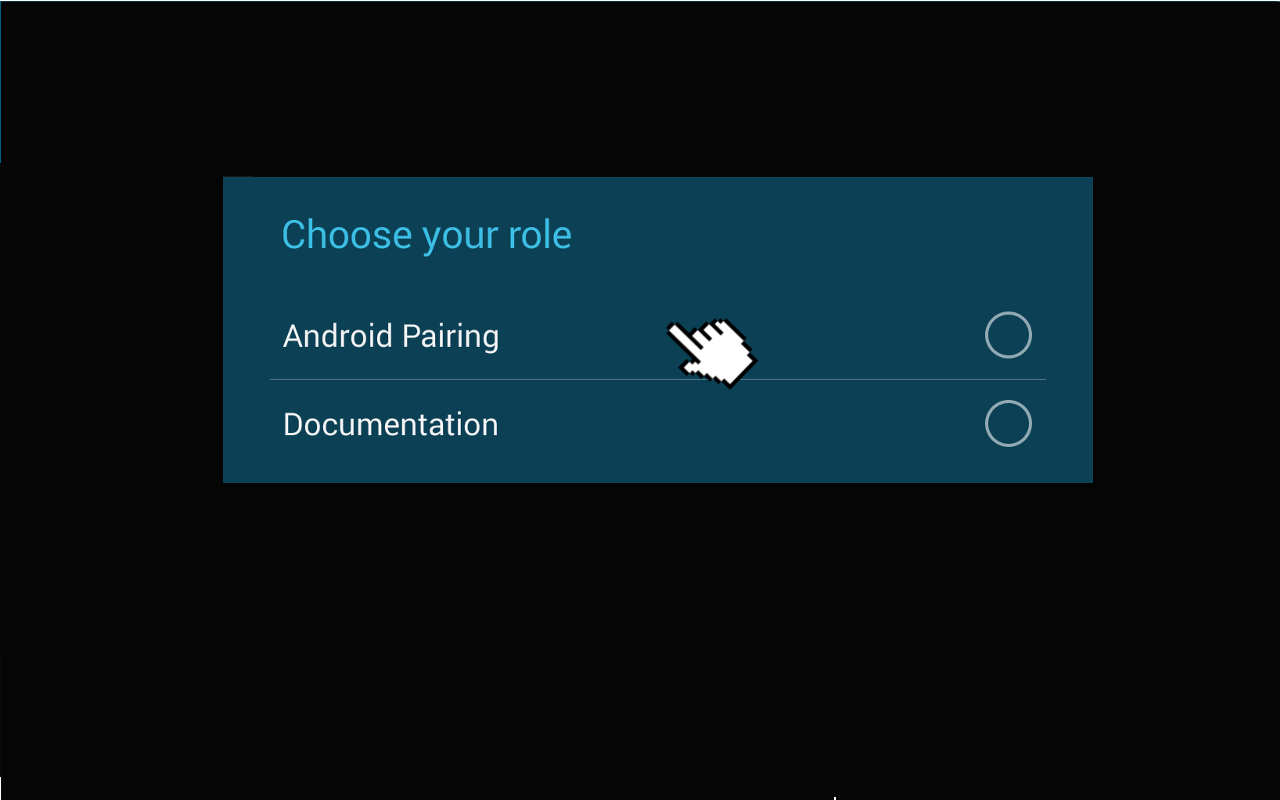

3.2 Android

* Download from the App Store

* How to run

* ROS Android development tutorials

Developing a TurtleBot Android application is similar to developing a ROS Android application.

It is important to note that the MasterChooser needs to be declared in the application's manifest to avoid a crash. This can be done by adding the following line inside the tag.

4. Keyboard Teleoperation

On the TurtleBot PC, run the following command:

$ roslaunch turtlebot_bringup minimal.launch

then

$ roslaunch turtlebot_teleop keyboard_teleop.launch

The code is in /opt/ros/indigo/share/turtlebot_teleop: keyboard_teleop.cpp and keyboard_teleop.py.

The keyboard_teleop.launch file runs the ROS node turtlebot_teleop_key of the package turtlebot_teleop.

The keyboard teleop node takes two parameters, scale_linear and scale_angular, which set the scale of the TurtleBot's linear and angular speed.
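To make the role of the two parameters concrete, here is a minimal sketch of how a keyboard teleop node might turn a key press into a velocity command. The key bindings, the default scale values and the function name `key_to_velocity` are assumptions made for illustration; they are not taken from turtlebot_teleop_key. Only the idea that scale_linear and scale_angular scale the commanded speeds comes from the text above.

```python
# Hypothetical key bindings: each key maps to a (linear, angular) direction.
# These are illustrative and are NOT the bindings of turtlebot_teleop_key.
KEY_DIRECTIONS = {
    "w": (1.0, 0.0),   # forward
    "s": (-1.0, 0.0),  # backward
    "a": (0.0, 1.0),   # turn left (counter-clockwise)
    "d": (0.0, -1.0),  # turn right (clockwise)
}


def key_to_velocity(key, scale_linear=0.5, scale_angular=1.5):
    """Return (linear m/s, angular rad/s) for a key; unknown keys stop the robot."""
    linear_dir, angular_dir = KEY_DIRECTIONS.get(key, (0.0, 0.0))
    return (linear_dir * scale_linear, angular_dir * scale_angular)


print(key_to_velocity("w"))        # forward at the assumed default scale
print(key_to_velocity("w", 0.25))  # same key, smaller scale_linear: slower
```

Lowering scale_linear lowers the speed for every forward or backward key at once, which is why it is a convenient single knob for the robot's top speed.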

The remap command in the launch file maps the velocity command topic to cmd_vel_mux/input/teleop, which is the topic on which the TurtleBot receives velocity commands.

In the turtlebot_teleop_key node, the programmers publish velocity commands on the topic turtlebot_teleop_keyboard/cmd_vel, but the TurtleBot listens for velocity commands on cmd_vel_mux/input/teleop.

The remap changes turtlebot_teleop_keyboard/cmd_vel to the topic defined on the TurtleBot.

Without the remap, keyboard teleoperation will not work, because the topic used to publish velocity commands differs from the one the robot uses to receive them.
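The effect of the remap can be illustrated without ROS at all. The sketch below models remapping as a simple name substitution. `resolve_topic` is a hypothetical helper written for this example, not a ROS API; the topic names are the ones mentioned above.

```python
# Topic the robot listens on for teleop velocity commands (from the text above).
ROBOT_INPUT_TOPIC = "cmd_vel_mux/input/teleop"


def resolve_topic(name, remappings):
    """Return the topic a node really uses after applying its remappings."""
    return remappings.get(name, name)


published = "turtlebot_teleop_keyboard/cmd_vel"

# Without a remap, publisher and robot use different topics: nothing moves.
print(resolve_topic(published, {}) == ROBOT_INPUT_TOPIC)

# With the remap, both ends agree on the topic.
remap = {published: ROBOT_INPUT_TOPIC}
print(resolve_topic(published, remap) == ROBOT_INPUT_TOPIC)
```

In this model the publisher's code never changes; only the name it resolves to does.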

5. Joystick Teleoperation

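For example, with a Logitech F710 gamepad, select direct mode by moving the X/D switch on the front of the gamepad to D. While driving, keep both front-left buttons pressed at the same time; only then do the direction keys control forward, backward, left and right motion.

Change the group of js0 from root to dialout: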

$ sudo chgrp dialout /dev/input/js0

Make the joystick accessible to the ROS joy node:

$ sudo chmod a+rw /dev/input/js0

The listing from ls -l /dev/input/js0 should then look like:

crw-rw-rw- 1 root dialout 188, 0 2009-08-14 12:04 /dev/input/js0
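The same permission check can be scripted. The following is a small sketch using only the Python standard library; `is_world_read_write` is a hypothetical helper name, and the device path is the one used above.

```python
import os
import stat


def is_world_read_write(path):
    """Return True if 'others' have both read and write permission on path."""
    mode = os.stat(path).st_mode
    needed = stat.S_IROTH | stat.S_IWOTH
    return (mode & needed) == needed


if os.path.exists("/dev/input/js0"):
    print("js0 accessible to all users:", is_world_read_write("/dev/input/js0"))
else:
    print("/dev/input/js0 not found; is the joystick plugged in?")
```

If the function returns False after the chmod, the device node may have been recreated, for example after unplugging and replugging the gamepad.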

On the TurtleBot PC, run the following command:

$ roslaunch turtlebot_teleop logitech.launch --screen

To drive, hold down the D button on the left side and use the four-way pad on the left to move forward, backward, left and right.

We can edit the joystick_teleop.launch file:

<param name="scale_linear" value="0.5" />

Reduce the value 0.5 to lower the TurtleBot's top speed.
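The interplay between the hold-to-drive button and scale_linear can be sketched as follows. This is an illustration under stated assumptions, not the code of the turtlebot_teleop joystick node: the function name `joy_to_velocity`, the axis and button indices, and the default scale_angular are all hypothetical and depend on the gamepad and its mode.

```python
def joy_to_velocity(axes, buttons, scale_linear=0.5, scale_angular=1.5,
                    axis_linear=1, axis_angular=0, deadman_button=4):
    """Convert joystick state into (linear m/s, angular rad/s).

    axes are floats in [-1, 1]; buttons are 0 or 1. The index defaults are
    hypothetical and depend on the gamepad and its mode.
    """
    if not buttons[deadman_button]:
        # Hold-to-drive button released: always command a stop.
        return (0.0, 0.0)
    return (axes[axis_linear] * scale_linear, axes[axis_angular] * scale_angular)


axes = [0.0, 1.0]          # stick pushed fully forward
held = [0, 0, 0, 0, 1]     # hold-to-drive button pressed
released = [0, 0, 0, 0, 0]

print(joy_to_velocity(axes, held))                     # top speed at scale 0.5
print(joy_to_velocity(axes, held, scale_linear=0.25))  # reduced top speed
print(joy_to_velocity(axes, released))                 # button released: stop
```

Because the stick value never exceeds 1, scale_linear is effectively the top speed in this sketch, which matches the advice to reduce it when the robot moves too fast.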

6. Android Apps Teleoperation

7. Voice Teleoperation

Go to the src directory of your catkin workspace, ~/catkin_ws/src/.

Create a new ROS package called gaitech_edu (or choose any other name) that depends on pocketsphinx, roscpp, rospy, sound_play and std_msgs, as follows:

$ catkin_create_pkg gaitech_edu roscpp rospy pocketsphinx sound_play std_msgs

In ~/catkin_ws/src/, run the following command to see all the files and folders created:

$ tree gaitech_edu

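Open your package.xml file and add all the required dependencies:

<package>
  <name>gaitech_edu</name>
  <version>0.0.1</version>
  <description>gaitech_edu</description>
  <maintainer email="...">ros</maintainer>
  <license>TODO</license>
  <buildtool_depend>catkin</buildtool_depend>
  <build_depend>pocketsphinx</build_depend>
  <build_depend>roscpp</build_depend>
  <build_depend>rospy</build_depend>
  <build_depend>sound_play</build_depend>
  <build_depend>std_msgs</build_depend>
  <run_depend>pocketsphinx</run_depend>
  <run_depend>roscpp</run_depend>
  <run_depend>rospy</run_depend>
  <run_depend>sound_play</run_depend>
  <run_depend>std_msgs</run_depend>
</package>

Now, compile your newly added package: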

$ cd ~/catkin_ws

$ catkin_make
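If catkin_make complains about a missing dependency, a quick check of the manifest can help. The sketch below parses a manifest with the standard library and reports which of the five required packages are not declared under a given tag. The helper name `missing_dependencies`, the trimmed sample manifest and the tag names build_depend and run_depend are assumptions for illustration; adapt the tag names to the manifest format your package actually uses.

```python
import xml.etree.ElementTree as ET

REQUIRED = {"pocketsphinx", "roscpp", "rospy", "sound_play", "std_msgs"}

# Hypothetical, trimmed manifest used only to exercise the check.
SAMPLE_MANIFEST = """
<package>
  <name>gaitech_edu</name>
  <build_depend>pocketsphinx</build_depend>
  <build_depend>roscpp</build_depend>
  <build_depend>rospy</build_depend>
  <build_depend>sound_play</build_depend>
  <run_depend>std_msgs</run_depend>
</package>
"""


def missing_dependencies(manifest_xml, tag, required=REQUIRED):
    """Return the required packages not declared under the given tag."""
    root = ET.fromstring(manifest_xml)
    declared = {element.text.strip() for element in root.findall(tag)}
    return sorted(set(required) - declared)


print(missing_dependencies(SAMPLE_MANIFEST, "build_depend"))
print(missing_dependencies(SAMPLE_MANIFEST, "run_depend"))
```

To check a real package, read the file contents first, for example with `open("package.xml").read()`, and pass the resulting string to the function.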

7.1 PocketSphinx Package for Speech Recognition

Install gstreamer0.10-pocketsphinx and the ROS sound drivers:

$ sudo apt-get install gstreamer0.10-pocketsphinx

$ sudo apt-get install ros-indigo-pocketsphinx

$ sudo apt-get install ros-indigo-audio-common

$ sudo apt-get install libasound2

7.2 Test the PocketSphinx Recognizer

Note that pocketsphinx is available as a package in ROS Indigo. In its demo/ directory you can find the voice commands and dictionary.

Run the following command:

$ roslaunch pocketsphinx robocup.launch

This command runs the recognizer.py script and loads the dictionary robocup.dic. You can now start saying some words.

——

INFO: ngram_search_fwdtree.c(186): Creating search tree

INFO: ngram_search_fwdtree.c(191): before: 0 root, 0 non-root channels, 12 single-phone words

INFO: ngram_search_fwdtree.c(326): after: max nonroot chan increased to 328

INFO: ngram_search_fwdtree.c(338): after: 77 root, 200 non-root channels, 11 single-phone words

7.3 Drive the Robot

If the recognizer successfully detects your spoken words, you can move on to the next step and talk to your robot.

The spoken words found by the recognizer will be published to the topic /recognizer/output.

$ rostopic echo /recognizer/output

---

data: hello

7.4 Create Your Vocabulary of Commands

Create a folder called config and, inside it, a text file called motion_commands.txt containing the following commands for the robot motion (you can extend them later):

$ vi motion_commands.txt

move forward

move backwards

turn right

turn left

You can later update the code to add more commands, such as faster to increase the robot's speed and slower to decrease it. The file voice_teleop_advanced.py contains a more elaborate example with more commands.
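As a rough idea of what handling faster and slower could look like, here is a minimal sketch. It is not taken from voice_teleop_advanced.py; the function name `adjust_speed`, the step size and the speed limits are assumptions chosen for illustration.

```python
def adjust_speed(speed, command, step=0.05, min_speed=0.05, max_speed=0.5):
    """Return the new linear speed after a 'faster' or 'slower' command."""
    if command == "faster":
        speed += step
    elif command == "slower":
        speed -= step
    # Clamp so repeated commands cannot leave the allowed range.
    return round(max(min_speed, min(max_speed, speed)), 3)


speed = 0.2
for spoken in ["faster", "faster", "slower"]:
    speed = adjust_speed(speed, spoken)
    print(spoken, "->", speed)
```

Clamping matters with voice input in particular, since a misrecognised or repeated word could otherwise keep increasing the speed.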

After editing the motion_commands.txt file, you have to compile it into special dictionary and pronunciation files that match the PocketSphinx specification.

The online CMU language model (lm) tool is very useful here: visit its website and upload your file. Click the Compile Knowledge Base button, then download the file labeled COMPRESSED TARBALL, which contains all the language model files that you need and that PocketSphinx can understand. Extract these files into the config subdirectory of the gaitech_edu package (or of the package in which you are working through this example). These files must be passed as input parameters to the recognizer.py node. To do so, you need to create a launch file as follows.
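Before uploading the vocabulary file, it can be worth checking it for blank lines, duplicates and stray spacing. The sketch below is a hypothetical sanity check, not part of the CMU tool or of pocketsphinx; `parse_vocabulary` is a name invented for this example, and the sample text mirrors the commands listed above.

```python
def parse_vocabulary(text):
    """Return (phrases, words) from a one-command-per-line vocabulary file."""
    phrases = []
    for line in text.splitlines():
        phrase = " ".join(line.lower().split())  # normalise case and spacing
        if phrase and phrase not in phrases:     # skip blanks and duplicates
            phrases.append(phrase)
    words = sorted({word for phrase in phrases for word in phrase.split()})
    return phrases, words


sample = """move forward
move backwards

turn right
turn left
"""

phrases, words = parse_vocabulary(sample)
print(phrases)
print(words)
```

The word list gives a quick view of every distinct word the vocabulary contains, which makes typos in the command file easy to spot.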

First, create a folder called launch to hold the launch files.

Then create a file called recognizer.launch and add the following XML code:

This file runs the recognizer.py node from the pocketsphinx package. Launch the recognizer.launch file:

$ roslaunch gaitech_edu recognizer.launch

$ rostopic echo /recognizer/output

7.5 Scripts

In this section we present a small program, src/turtlebot/voice_teleop/voice_teleop.py (voice_teleop.cpp for C++), that lets you control your TurtleBot with voice commands.

The idea is simple. The program subscribes to the topic /recognizer/output, which is published by the recognizer.py node of the pocketsphinx package using the dictionary of words created above. When a command is received, the callback of the /recognizer/output subscription sets the velocity of the robot according to that command.

Edit the voice_teleop.py file in the src/turtlebot/voice_teleop/ directory:

    #!/usr/bin/env python

    import rospy
    from geometry_msgs.msg import Twist
    from std_msgs.msg import String


    class RobotVoiceTeleop:
        # define the constructor of the class
        def __init__(self):
            # initialize the ROS node with the name voice_teleop
            rospy.init_node('voice_teleop')

            # Publish the Twist message to the cmd_vel topic
            self.cmd_vel_pub = rospy.Publisher('/cmd_vel', Twist, queue_size=5)

            # Subscribe to the /recognizer/output topic to receive voice commands.
            rospy.Subscriber('/recognizer/output', String, self.voice_command_callback)

            # create a Rate object to sleep the process at 5 Hz
            rate = rospy.Rate(5)

            # Initialize the Twist message we will publish.
            self.cmd_vel = Twist()

            # make sure the robot is stopped by default
            self.cmd_vel.linear.x = 0
            self.cmd_vel.angular.z = 0

            # A mapping from keywords or phrases to commands.
            # We consider the following simple commands, which you can extend on your own.
            self.commands = ['stop',
                             'forward',
                             'backward',
                             'turn left',
                             'turn right',
                             ]

            rospy.loginfo("Ready to receive voice commands")

            # We have to keep publishing the cmd_vel message if we want the robot to keep moving.
            while not rospy.is_shutdown():
                self.cmd_vel_pub.publish(self.cmd_vel)
                rate.sleep()

        def voice_command_callback(self, msg):
            # Get the motion command from the recognized phrase
            command = msg.data

            if (command in self.commands):
                if command == 'forward':
                    self.cmd_vel.linear.x = 0.2
                    self.cmd_vel.angular.z = 0.0
                elif command == 'backward':
                    self.cmd_vel.linear.x = -0.2
                    self.cmd_vel.angular.z = 0.0
                elif command == 'turn left':
                    self.cmd_vel.linear.x = 0.0
                    self.cmd_vel.angular.z = 0.5
                elif command == 'turn right':
                    self.cmd_vel.linear.x = 0.0
                    self.cmd_vel.angular.z = -0.5
                elif command == 'stop':
                    self.cmd_vel.linear.x = 0.0
                    self.cmd_vel.angular.z = 0.0
            else:  # command not found
                # print 'command not found: ' + command
                self.cmd_vel.linear.x = 0.0
                self.cmd_vel.angular.z = 0.0

            print ("linear speed : " + str(self.cmd_vel.linear.x))
            print ("angular speed: " + str(self.cmd_vel.angular.z))


    if __name__ == "__main__":
        try:
            RobotVoiceTeleop()
            rospy.spin()
        except rospy.ROSInterruptException:
            rospy.loginfo("Voice navigation terminated.")
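One thing to watch: the vocabulary file lists move forward and move backwards, while the script compares the recognized phrase against forward and backward with an exact match, so those phrases would fall through to the stop branch. A possible way around this, sketched below without any ROS dependency, is to match on keywords inside the phrase. The function name `phrase_to_velocity` and the keyword table are assumptions for illustration; the speeds are copied from the script above.

```python
# Keyword -> (linear m/s, angular rad/s); speeds match the script above.
KEYWORD_VELOCITIES = [
    ("stop", (0.0, 0.0)),
    ("forward", (0.2, 0.0)),
    ("backward", (-0.2, 0.0)),
    ("left", (0.0, 0.5)),
    ("right", (0.0, -0.5)),
]


def phrase_to_velocity(phrase):
    """Map a recognised phrase to a velocity; unknown phrases stop the robot."""
    text = phrase.lower()
    for keyword, velocity in KEYWORD_VELOCITIES:
        if keyword in text:  # substring match, so 'backwards' matches 'backward'
            return velocity
    return (0.0, 0.0)


for spoken in ["move forward", "move backwards", "turn left", "hello"]:
    print(spoken, "->", phrase_to_velocity(spoken))
```

Inside the callback, the returned pair could be assigned to self.cmd_vel.linear.x and self.cmd_vel.angular.z. The simpler alternative is to make the entries in self.commands identical to the lines of motion_commands.txt.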

Create the following launch file, called turtlebot_voice_teleop_stage.launch, which runs the recognizer.py node, the voice_teleop.py node and the turtlebot_stage simulator.

Note the topic remapping in the launch file for the voice_teleop.py node: the node publishes velocities to the topic /cmd_vel, whereas the turtlebot_stage simulator waits for velocities on the topic /cmd_vel_mux/input/teleop.

Launch the file as follows:

$ roslaunch gaitech_edu turtlebot_voice_teleop_stage.launch

7.6 With a Real TurtleBot Robot

Run the following commands:

$ roslaunch turtlebot_bringup minimal.launch

9. Get ready for a movement test:

$ mkdir ~/helloworld

$ cd ~/helloworld/

$ git clone https://github.com/markwsilliman/turtlebot/

$ cd turtlebot

For example:

$ python goforward.py
