LeNet on the TK1 - 11

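Using Caffe's prototxt and solver structure together with the MNIST training and test images, we build the network structure and configuration and implement handwritten digit recognition.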

2 IPython – qtconsole – Notebook

2.1 IPython

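Verifying and debugging Python code with plain pdb is fairly limited, which is where IPython comes in as a powerful tool:

Tab completion: for example, type im and press Tab, and it is completed to import.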

$ sudo apt-get install ipython

$ sudo apt-get install python-zmq

$ ipython

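This starts the interactive programming environment.

Magic commands, prefixed with %, are available:

%run test.py: run a Python script directly.

%pwd: show the current working directory.

%cd: change the working directory.

Both the qtconsole and the notebook described below use matplotlib, the well-known Python plotting library,

which can output images in many formats and display plots interactively through several GUI toolkits.

Here its inline option is used to embed matplotlib plots in the qtconsole or notebook instead of opening a separate window.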

2.2 qtconsole

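The IPython team developed a GUI console based on the Qt framework, intended to give terminal-style applications rich-text features such as inline images, multi-line editing, and syntax highlighting.

The advantage is that no shell console is left running in the background; only IPython's Qt console runs.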

$ sudo apt-get install ipython-qtconsole

$ ipython qtconsole --pylab=inline

2.3 Notebook

Alternatively, use the notebook:

$ sudo apt-get install ipython-notebook

$ ipython notebook --pylab=inline

Once the interface appears, you can enter several lines of code and run them with Shift+Enter.

Shift-Enter : run cell

Ctrl-Enter : run cell in-place

Alt-Enter : run cell, insert below

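2.4 Notes

Debugging in IPython is convenient because code can be run block by block;

but when something fails, the error is reported only indirectly (for example "kernel restarting"), and the real cause is hard to see.

In many cases, stepping through line by line with pdb in plain python remains irreplaceable.

For the debugging that follows, the directory from which python is started matters a great deal:

$ cd …CAFFE_ROOT…

$ ipython or python … …

Otherwise many path-related errors show up.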

If you see:

db_lmdb.hpp:14] Check failed: mdb_status == 0 (2 vs. 0) No such file or directory

the data source location in the train and test prototxt files is wrong.

If you see:

caffe solver.cpp:442] Cannot write to snapshot prefix 'examples/mnist/lenet'.

this can be a path problem; changing lenet in the prefix to some other name, such as zenet, may also help.

If you see:

ipython "Kernel Restarting" about solver = caffe.SGDSolver('mnist/lenet_auto_solver.prototxt')

you may need to state the mode explicitly at the end of the solver file:

solver_mode: GPU
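The two path errors above can be checked before a solver is created. The following is a minimal sketch using only the standard library; the helper names `missing_sources` and `snapshot_dir_writable` are made up for this illustration and are not part of Caffe, and the regular expression assumes the usual `source: "..."` form found in the data layers.

```python
import os
import re

# Matches lines such as:  source: "examples/mnist/mnist_train_lmdb"
SOURCE_RE = re.compile(r'source\s*:\s*"([^"]+)"')


def missing_sources(prototxt_text, base_dir="."):
    """Return the `source` paths in prototxt_text that do not exist under base_dir."""
    missing = []
    for path in SOURCE_RE.findall(prototxt_text):
        if not os.path.exists(os.path.join(base_dir, path)):
            missing.append(path)
    return missing


def snapshot_dir_writable(snapshot_prefix, base_dir="."):
    """Return True if the directory part of snapshot_prefix exists and is writable."""
    directory = os.path.dirname(os.path.join(base_dir, snapshot_prefix)) or "."
    return os.path.isdir(directory) and os.access(directory, os.W_OK)
```

Calling both helpers with `base_dir` set to the directory from which ipython is started shows directly whether relative paths in the prototxt files will resolve.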

3 Setting up the prototxt

3.0

$ cd /home/ubuntu/sdcard/caffe-for-cudnn-v2.5.48

$ ipython qtconsole --pylab=inline

3.1 Configuring the Python environment

# we'll use the pylab import for numpy and plot inline
from pylab import *
%matplotlib inline

# Import caffe, adding it to sys.path if needed. (Make sure you've built pycaffe.)
# caffe_root = '../'  # use this instead when running from {caffe_root}/examples/
import sys
caffe_root = './'  # we start from the Caffe root directory; the trailing slash is required
sys.path.insert(0, caffe_root + 'python')
import caffe
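Building the pycaffe path by string concatenation depends on `caffe_root` ending in a slash. A small sketch of a more forgiving variant follows; `pycaffe_dir` and `add_pycaffe_to_path` are hypothetical helper names, and the only assumption taken from the setup above is that pycaffe lives in the `python` subdirectory of the Caffe root.

```python
import os
import sys


def pycaffe_dir(caffe_root):
    """Return the directory holding the pycaffe package under caffe_root."""
    return os.path.join(caffe_root, "python")


def add_pycaffe_to_path(caffe_root):
    """Put pycaffe's directory at the front of sys.path (once) and return it."""
    path = os.path.abspath(pycaffe_dir(caffe_root))
    if path not in sys.path:
        sys.path.insert(0, path)
    return path


# Plain concatenation silently produces a wrong name when the slash is missing.
assert "." + "python" == ".python"
```

With this, `add_pycaffe_to_path('.')` followed by `import caffe` behaves the same whether or not the root is written with a trailing slash.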

3.2 About the data

Optional; skip this step if the data is already in place.

# Using LeNet example data and networks, if already downloaded skip this step.

## run scripts from caffe root

## import os

## os.chdir(caffe_root)

## Download data

## !data/mnist/get_mnist.sh

## Prepare data

## !examples/mnist/create_mnist.sh

## back to examples

## os.chdir('examples')

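3.3 About the network

Optional; skip this step if the files are already in place.

The train and test network files ship with the Caffe installation and can be used as-is.

You can also generate them yourself; the following builds a variant of LeNet.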

# We’ll write the net in a succinct and natural way as Python code that serializes to Caffe’s protobuf model format.

# This network expects to read from pre-generated LMDBs,

# but reading directly from ndarrays is also possible using MemoryDataLayer.

# We’ll need two external files:

# the net prototxt, defining the architecture and pointing to the train/test data

# the solver prototxt, defining the learning parameters

from caffe import layers as L, params as P

def lenet(db_path,batch_size):

n=caffe.NetSpec()

n.data,n.label=L.Data(batch_size=batch_size,backend=P.Data.LMDB,source=db_path, transform_param=dict(scale=1./255),ntop=2)

n.conv1=L.Convolution(n.data,kernel_size=5,num_output=20,weight_filler=dict(type=’xavier’))

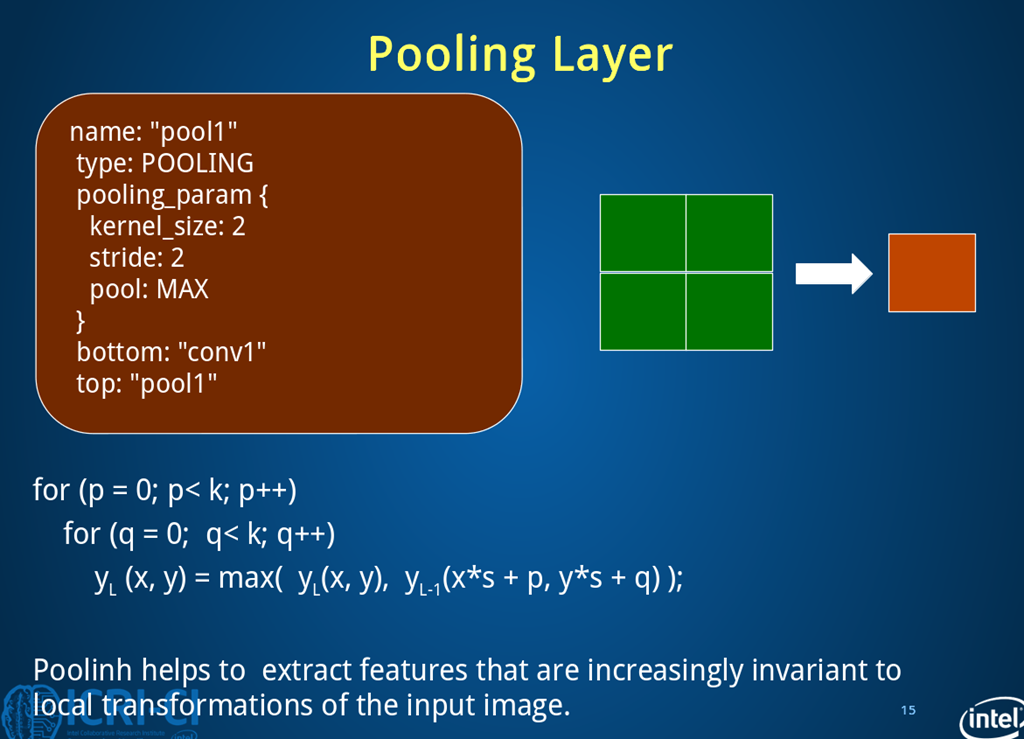

n.pool1=L.Pooling(n.conv1,kernel_size=2,stride=2,pool=P.Pooling.MAX)

n.conv2=L.Convolution(n.pool1,kernel_size=5,num_output=50,weight_filler=dict(type=’xavier’))

n.pool2=L.Pooling(n.conv2,kernel_size=2,stride=2,pool=P.Pooling.MAX)

n.fc1 =L.InnerProduct(n.pool2,num_output=500,weight_filler=dict(type=’xavier’))

n.relu1=L.ReLU(n.fc1,in_place=True)

n.score=L.InnerProduct(n.relu1,num_output=10,weight_filler=dict(type=’xavier’))

n.loss=L.SoftmaxWithLoss(n.score,n.label)

return n.to_proto()

# write net to disk in a human-readable serialization using Google’s protobuf

# You can read, write, and modify this description directly

with open(‘examples/mnist/lenet_auto_train.prototxt’, ‘w’) as f: # this is train

f.write(str(lenet(‘examples/mnist/mnist_train_lmdb’, 64)))

with open(‘examples/mnist/lenet_auto_test.prototxt’, ‘w’) as f: # this is solver

f.write(str(lenet(‘examples/mnist/mnist_test_lmdb’, 100)))

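This writes the train and test network structures to disk.

The file names are up to you, but they must match what is configured in solver.prototxt.

# you can view the train net structure generated in the previous step

# $ vi examples/mnist/lenet_auto_train.prototxt

# you can view the test net structure generated in the previous step

# $ vi examples/mnist/lenet_auto_test.prototxt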

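3.4 About the solver

Optional; skip this step if the file is already in place.

The lenet_auto_solver.prototxt file ships with the Caffe installation and can be used as-is.

You can also generate this solver.prototxt yourself.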

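from caffe.proto import caffe_pb2
s = caffe_pb2.SolverParameter()
s.random_seed = 0
# the remaining parameters follow the same format as those seen earlier
… …
# finally (yourpath is a placeholder for where the solver file should be written)
with open(yourpath, 'w') as f:
    f.write(str(s))

Then load it in the same way as any other solver file:

solver = None
solver = caffe.get_solver(yourpath)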

The solver file looks roughly like this:

# $ vi examples/mnist/lenet_auto_solver.prototxt

# The train/test net protocol buffer definition

train_net: "examples/mnist/lenet_auto_train.prototxt"

test_net: "examples/mnist/lenet_auto_test.prototxt"

# test_iter specifies how many forward passes the test should carry out.

# In the case of MNIST, we have test batch size 100 and 100 test iterations,

# covering the full 10,000 testing images.

test_iter: 100

# Carry out testing every 500 training iterations.

test_interval: 500

# The base learning rate, momentum and the weight decay of the network.

base_lr: 0.01

momentum: 0.9

weight_decay: 0.0005

# The learning rate policy

lr_policy: "inv"

gamma: 0.0001

power: 0.75

# Display every 100 iterations

display: 100

# The maximum number of iterations

max_iter: 10000

# snapshot intermediate results

snapshot: 5000

snapshot_prefix: "mnist/lenet"
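To see what the "inv" policy does with the values above, the rate can be computed by hand. Caffe's solver documentation gives the policy as base_lr * (1 + gamma * iter) ^ (-power); the sketch below simply evaluates that formula with the numbers from this solver file. The function names are made up for the illustration.

```python
def inv_lr(iteration, base_lr=0.01, gamma=0.0001, power=0.75):
    """Learning rate under the "inv" policy: base_lr * (1 + gamma * iter) ** (-power)."""
    return base_lr * (1.0 + gamma * iteration) ** (-power)


def images_tested(test_iter=100, test_batch_size=100):
    """Number of test images seen in one test pass."""
    return test_iter * test_batch_size


for it in (0, 500, 5000, 10000):
    print("iter %5d  lr %.6f" % (it, inv_lr(it)))
```

The rate starts at base_lr and decays smoothly; by max_iter (10000) the factor is 2 ** -0.75, so the rate ends a little under 60% of where it started. `images_tested()` confirms that 100 test iterations at batch size 100 cover the 10,000 test images.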

3.5 Loading the solver

# Let's pick a device and load the solver
caffe.set_device(0)
caffe.set_mode_gpu()  # use the GPU

# load the solver and create train and test nets
solver = None
# We'll use SGD (with momentum), but other methods are also available.
solver = caffe.SGDSolver('examples/mnist/lenet_auto_solver.prototxt')

Note: the objective function that Caffe optimizes is non-convex and has no closed-form solution, so it has to be solved with an iterative optimization method.

3.6 Inspecting the network

Check the output shapes | inspect the intermediate features (blobs) | dimensions of the parameters (params)

# each output is (batch size, feature dim, spatial dim)

Intermediate features (blobs)

[(k, v.data.shape) for k, v in solver.net.blobs.items()]

… …

[('data', (64, 1, 28, 28)),
 ('label', (64,)),
 ('conv1', (64, 20, 24, 24)),
 ('pool1', (64, 20, 12, 12)),
 ('conv2', (64, 50, 8, 8)),
 ('pool2', (64, 50, 4, 4)),
 ('fc1', (64, 500)),
 ('score', (64, 10)),
 ('loss', ())]

Parameters (params)

# just print the weight sizes (we'll omit the biases)

[(k, v[0].data.shape) for k, v in solver.net.params.items()]

… …

[('conv1', (20, 1, 5, 5)),
 ('conv2', (50, 20, 5, 5)),
 ('fc1', (500, 800)),
 ('score', (10, 500))]
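The spatial sizes in the blob list can be reproduced with a little arithmetic. The sketch below assumes no padding and stride 1 for the convolutions (as in the net definition) and uses Caffe's round-up rule for pooling; `conv_out` and `pool_out` are names invented for this example.

```python
import math


def conv_out(size, kernel, stride=1, pad=0):
    """Spatial output size of a convolution layer."""
    return (size + 2 * pad - kernel) // stride + 1


def pool_out(size, kernel, stride):
    """Spatial output size of an unpadded pooling layer (Caffe rounds up)."""
    return int(math.ceil((size - kernel) / float(stride))) + 1


size = 28                          # MNIST images are 28x28
conv1 = conv_out(size, 5)          # 5x5 kernel
pool1 = pool_out(conv1, 2, 2)      # 2x2 max pooling, stride 2
conv2 = conv_out(pool1, 5)
pool2 = pool_out(conv2, 2, 2)
fc1_inputs = 50 * pool2 * pool2    # 50 channels flattened into fc1

print("%d %d %d %d %d" % (conv1, pool1, conv2, pool2, fc1_inputs))
```

The last value is the second dimension of the fc1 weight matrix: the 50 feature maps of pool2 are flattened before the fully connected layer.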

3.7 Running once

This crashes in the qtconsole, but not in the notebook, and certainly not in plain python.

Run one forward pass on the training set and the test set

# check that everything is loaded as we expect,
# by running a forward pass on the train and test nets,
# and check that they contain our data.
# step does one full iteration, covering all three phases: forward evaluation, backward propagation, and update.
# forward does only the first of these.
# step does only one iteration: a single batch of 64 training images. A full pass over all 60,000 training images (about 938 batches of 64) is called an epoch.

Training set

solver.net.forward()  # train net

… …

{'loss': array(2.363983154296875, dtype=float32)}

Test set

solver.test_nets[0].forward()  # test net (there can be more than one)

… …

{'loss': array(2.365971088409424, dtype=float32)}
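Both initial losses are close to what a classifier with no knowledge would score. As a rough sanity check (a sketch, not something taken from the Caffe output), the softmax loss of a uniform guess over 10 classes is -ln(1/10); `uniform_guess_loss` is a name made up for this example.

```python
import math


def uniform_guess_loss(num_classes):
    """Cross-entropy loss when every class gets probability 1/num_classes."""
    return -math.log(1.0 / num_classes)


baseline = uniform_guess_loss(10)
print("loss of a uniform guess over 10 digits: %.4f" % baseline)
```

An untrained network with small random weights should land near this value, which is a quick way to confirm that the loss layer and labels are wired up sensibly before training.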

Display the first 8 training images and their labels

imshow(solver.net.blobs['data'].data[:8, 0].transpose(1, 0, 2).reshape(28, 8*28), cmap='gray'); axis('off')
print('train labels: {}'.format(solver.net.blobs['label'].data[:8]))

… …

train labels: [ 5. 0. 4. 1. 9. 2. 1. 3.]

Display the first 8 test images and their labels

imshow(solver.test_nets[0].blobs['data'].data[:8, 0].transpose(1, 0, 2).reshape(28, 8*28), cmap='gray'); axis('off')
print('test labels: {}'.format(solver.test_nets[0].blobs['label'].data[:8]))

… …

test labels: [ 7. 2. 1. 0. 4. 1. 4. 9.]

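3.8 Running the network

Having confirmed that the correct data and labels are loaded, we start running the solver, first on a single batch to see whether the gradients change.

Both train and test nets seem to be loading data, and to have correct labels.

Take one SGD step and look at how the weights change

# Let's take one step of (minibatch) SGD and see what happens.

solver.step(1)

The change in the first-layer weights is shown in the figure below: 20 filters of size 5×5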

# Do we have gradients propagating through our filters?

# Let’s see the updates to the first layer, shown here as a 4*5 grid of 5*5 filters.

imshow(solver.net.params['conv1'][0].diff[:, 0].reshape(4, 5, 5, 5).transpose(0, 2, 1, 3).reshape(4*5, 5*5), cmap='gray'); axis('off')

… …

(-0.5, 24.5, 19.5, -0.5)
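The reshape/transpose/reshape chain in the imshow call is a compact way of tiling 20 filters into a 4 by 5 grid. The same layout can be written out with explicit loops, which makes the index mapping visible. This is a pure-Python sketch on dummy data; `tile_filters` is a hypothetical helper, not a Caffe or NumPy function.

```python
def tile_filters(filters, rows, cols):
    """Lay out rows*cols filters (each an h x w nested list) on one 2-D grid."""
    h, w = len(filters[0]), len(filters[0][0])
    grid = [[0.0] * (cols * w) for _ in range(rows * h)]
    for r in range(rows):
        for c in range(cols):
            f = filters[r * cols + c]   # filter index, row-major over the grid
            for y in range(h):
                for x in range(w):
                    grid[r * h + y][c * w + x] = f[y][x]
    return grid


# 20 dummy 5x5 "filters"; filter k is filled with the value k so tiles are easy to spot
filters = [[[float(k)] * 5 for _ in range(5)] for k in range(20)]
grid = tile_filters(filters, rows=4, cols=5)
```

The resulting grid is 20 rows by 25 columns, which matches the axis limits printed above (x up to 24.5, y up to 19.5).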

Run the network; this process is the same as training through the caffe binary

3.9 Training with a custom loop

# Let’s run the net for a while, keeping track of a few things as it goes.

# Note that this process will be the same as if training through the caffe binary :

# *logging will continue to happen as normal

# *snapshots will be taken at the interval specified in the solver prototxt (here, every 5000 iterations)

# *testing will happen at the interval specified (here, every 500 iterations)

# Since we have control of the loop in Python, we’re free to do many other things as well, for example:

# *write a custom stopping criterion

# *change the solving process by updating the net in the loop

The previous post used %timeit; this one uses %%time.

%%time
niter = 200
test_interval = 25
# losses will also be stored in the log
train_loss = zeros(niter)
test_acc = zeros(int(np.ceil(niter / float(test_interval))))
output = zeros((niter, 8, 10))

# the main solver loop
for it in range(niter):
    solver.step(1)  # SGD by Caffe

    # store the train loss
    train_loss[it] = solver.net.blobs['loss'].data

    # store the output on the first test batch
    # (start the forward pass at conv1 to avoid loading new data)
    solver.test_nets[0].forward(start='conv1')
    output[it] = solver.test_nets[0].blobs['score'].data[:8]

    # run a full test every so often
    # (Caffe can also do this for us and write to a log, but we show here
    # how to do it directly in Python, where more complicated things are easier.)
    if it % test_interval == 0:
        print('iteration {} testing...'.format(it))
        correct = 0
        for test_it in range(100):
            solver.test_nets[0].forward()
            correct += sum(solver.test_nets[0].blobs['score'].data.argmax(1)
                           == solver.test_nets[0].blobs['label'].data)
        test_acc[it // test_interval] = correct / 1e4

… …

iteration 0 testing...
iteration 25 testing...
iteration 50 testing...
iteration 75 testing...
iteration 100 testing...
iteration 125 testing...
iteration 150 testing...
iteration 175 testing...

CPU times: user 19.4 s, sys: 2.72 s, total: 22.2 s

Wall time: 20.9 s
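The comments at the start of this section mention writing a custom stopping criterion. One possible shape for that, shown here only as a sketch, is a small object that watches the train loss and signals when it has stopped improving. The class name `LossPlateau`, the `patience` and `min_delta` parameters, and the fake loss values are all assumptions for illustration; inside the real loop the value fed to `update` would be the train loss read after each `solver.step(1)`.

```python
class LossPlateau(object):
    """Signal a stop when the best loss has not improved for `patience` steps."""

    def __init__(self, patience=50, min_delta=1e-3):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.stale = 0

    def update(self, loss):
        """Record one loss value; return True when training should stop."""
        if loss < self.best - self.min_delta:
            self.best = loss
            self.stale = 0
        else:
            self.stale += 1
        return self.stale >= self.patience


# Stand-in for the per-iteration train loss of a real run.
fake_losses = [2.3, 1.5, 0.9, 0.5, 0.4] + [0.4] * 10

stopper = LossPlateau(patience=5)
stopped_at = None
for it, loss in enumerate(fake_losses):
    if stopper.update(loss):
        stopped_at = it
        break
```

Because minibatch losses are noisy, a real criterion would probably smooth the loss (for example with a running mean) before comparing; the sketch leaves that out to keep the idea visible.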

Plot the training loss and the test accuracy

# plot the train loss and test accuracy
_, ax1 = subplots()
ax2 = ax1.twinx()
ax1.plot(arange(niter), train_loss)
ax2.plot(test_interval * arange(len(test_acc)), test_acc, 'r')
ax1.set_xlabel('iteration')
ax1.set_ylabel('train loss')
ax2.set_ylabel('test accuracy')
ax2.set_title('Test Accuracy: {:.2f}'.format(test_acc[-1]))

… …

# The plot above shows that the loss seems to have dropped quickly and converged (except for stochasticity)

Plot the classification results

# Since we saved the results on the first test batch, we can watch how our prediction scores evolved.
# We'll plot time on the x axis and each possible label on the y, with lightness indicating confidence.
for i in range(8):
    figure(figsize=(2, 2))
    imshow(solver.test_nets[0].blobs['data'].data[i, 0], cmap='gray')
    figure(figsize=(20, 2))
    imshow(output[:100, i].T, interpolation='nearest', cmap='gray')  # output[:100, i]: scores from the first 100 iterations

Note:

# The results above look good; now look in more detail at how the score of each digit evolves

We started with little idea about any of these digits, and ended up with correct classifications for each.

If you’ve been following along, you’ll see the last digit is the most difficult, a slanted “9” that’s (understandably) most confused with “4”.

Classifying with softmax

# Note that these are the "raw" output scores rather than the softmax-computed probability vectors.
# The code below makes it easier to see the confidence of our net (but harder to see the scores for less likely digits).
for i in range(8):
    figure(figsize=(2, 2))
    imshow(solver.test_nets[0].blobs['data'].data[i, 0], cmap='gray')
    figure(figsize=(10, 2))
    imshow(exp(output[:50, i].T) / exp(output[:50, i].T).sum(0), interpolation='nearest', cmap='gray')
    xlabel('iteration')
    ylabel('label')

… …

The contrast in the output images is sharper, because the softmax computation in the code above pushes low and high scores apart. A commented-out variant for the first two digits over 100 iterations:

# for i in range(2):
#     figure(figsize=(2, 2))
#     imshow(solver.test_nets[0].blobs['data'].data[i, 0], cmap='gray')
#     figure(figsize=(20, 2))
#     imshow(exp(output[:100, i].T) / exp(output[:100, i].T).sum(0), interpolation='nearest', cmap='gray')
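The expression exp(x) / exp(x).sum(0) used above is the plain softmax. For the score ranges seen here it works fine, but with very large scores exp can overflow. A common variant, given here as a standard-library sketch rather than the code used in this post, subtracts the maximum score first; the result is mathematically identical. The sample scores are made up.

```python
import math


def softmax(scores):
    """Softmax of a list of raw scores, shifted by the max for numerical stability."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


raw = [1.0, 2.0, 8.0]   # made-up raw scores for three classes
probs = softmax(raw)
print(["%.4f" % p for p in probs])
```

The exponential is what produces the polarizing effect: a score gap of 6 between the top class and the runner-up turns into a probability ratio of about e^6, so the winning class takes almost all of the mass.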

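3.10 Next steps

# now we've defined, trained, and tested LeNet

# there are many possible next steps:

1. Define a new architecture (e.g. add fully connected layers, change the ReLU, etc.)

2. Tune parameters such as lr (search at exponential intervals, e.g. 0.1, 0.01, 0.001)

3. Train for longer

4. Switch from SGD to Adam

5. Others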
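For step 2, searching at exponential intervals means trying learning rates that differ by a constant factor rather than a constant amount. A tiny sketch of generating such candidates follows; `log_spaced` is a hypothetical helper name, and each candidate would still have to be written into base_lr and trained separately.

```python
def log_spaced(start_exp, stop_exp, base=10.0):
    """Values base**e for integer exponents e from start_exp to stop_exp, inclusive."""
    step = -1 if stop_exp < start_exp else 1
    return [base ** e for e in range(start_exp, stop_exp + step, step)]


candidates = log_spaced(-1, -3)
for lr in candidates:
    print("candidate base_lr: %g" % lr)
```

Once the best order of magnitude is known, a finer search around it (for example factors of 2 or 3) is a natural follow-up.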

http://caffe.berkeleyvision.org/tutorial/interfaces.html

blog.csdn.net/thystar/article/details/50668877

https://github.com/BVLC/caffe/blob/master/examples/00-classification.ipynb

https://github.com/BVLC/caffe/blob/master/examples/01-learning-lenet.ipynb