TurtleBot Mobile Robot (23): Localization and Navigation in a Real Environment

Building a Map

TurtleBot maps are built with gmapping. You can tweak this algorithm by modifying the parameters in the launch/includes/_gmapping.launch file.

First, start the robot drivers, and then start the ROS node responsible for building the map.

Note that ROS builds the map with the gmapping software package, which is fully integrated with ROS.

The gmapping package contains a ROS wrapper for OpenSlam’s Gmapping. The package provides laser-based SLAM (Simultaneous Localization and Mapping), as a ROS node called slam_gmapping.

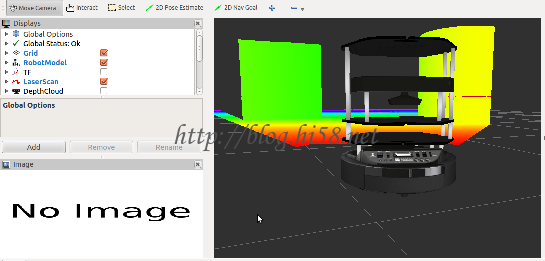

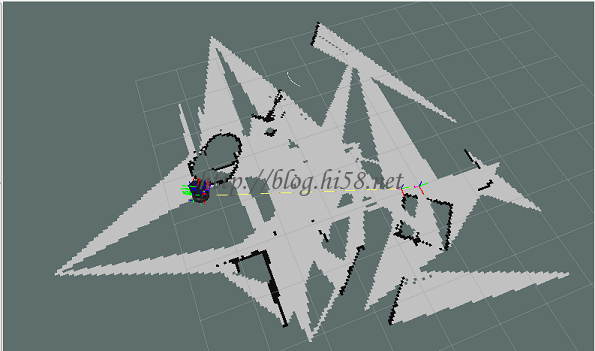

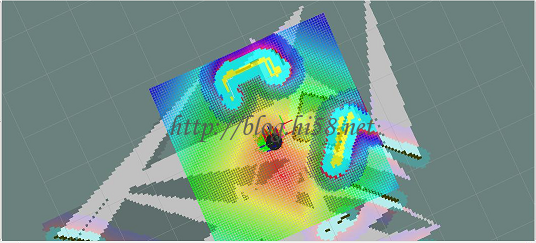

Using slam_gmapping, you can create a 2-D occupancy grid map (like a building floorplan) from laser and pose data collected by a mobile robot.

In separate terminals, run:

$ roscore

$ roslaunch turtlebot_bringup minimal.launch

$ roslaunch turtlebot_navigation gmapping_demo.launch

Then open rviz to watch the map being built, and drive the robot around with the keyboard:

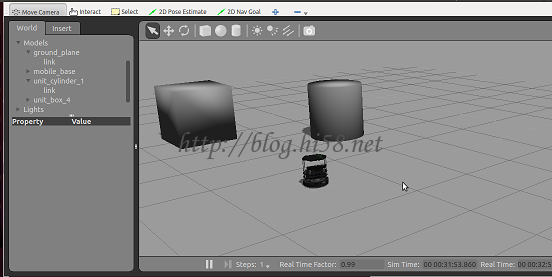

$ roslaunch turtlebot_rviz_launchers view_navigation.launch

$ roslaunch turtlebot_teleop keyboard_teleop.launch

Save the built map:

$ rosrun map_server map_saver -f /tmp/my_map

This generates two files named after the path you passed to map_saver with -f.

In this case, the two files are /tmp/my_map.pgm and /tmp/my_map.yaml.

If you open the .yaml file you will see something like this:

image: /tmp/my_map.pgm

resolution: 0.050000

origin: [-12.200000, -12.200000, 0.000000]

negate: 0

occupied_thresh: 0.65

free_thresh: 0.196

The .yaml file contains metadata about the map, including the location of the map image file, its resolution, its origin, the occupied-cell threshold, and the free-cell threshold. Here are the details for the different parameters:

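* image: the path to the image file containing the occupancy data; it can be absolute or relative to the location of the YAML file. Here it is a PGM image whose pixels hold values between 0 and 255. With negate: 0, a pixel value x is converted to an occupancy probability p = (255 - x) / 255, so darker pixels are more likely to be occupied.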

* resolution: the resolution of the map in meters/pixel; in our example, 0.05 means 5 centimeters per cell (pixel).

* origin: The 2-D pose of the lower-left pixel in the map, as (x, y, yaw), with yaw as counterclockwise rotation (yaw=0 means no rotation). Many parts of the system currently ignore yaw.

* occupied_thresh: Pixels with occupancy probability greater than this threshold are considered completely occupied.

* free_thresh: Pixels with occupancy probability less than this threshold are considered completely free.

* negate: whether the white/black, free/occupied semantics should be reversed (the interpretation of the thresholds is unaffected).
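The parameters above can be tied together in a short sketch. The Python below is not map_server's code. It assumes the flat `key: value` layout shown in the my_map.yaml example, so it needs no YAML library. It applies the thresholds as described, using p = (255 - x) / 255 when negate is 0. It also converts an image pixel to world coordinates from resolution and origin, ignoring yaw. The function names and the 600-pixel image height used below are hypothetical.

```python
# Metadata copied from the my_map.yaml example above.
MAP_YAML = """\
image: /tmp/my_map.pgm
resolution: 0.050000
origin: [-12.200000, -12.200000, 0.000000]
negate: 0
occupied_thresh: 0.65
free_thresh: 0.196
"""


def parse_map_yaml(text):
    """Parse flat 'key: value' map metadata (assumption: no nesting)."""
    meta = {}
    for line in text.splitlines():
        if not line.strip() or line.lstrip().startswith("#"):
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        if key == "image":
            meta[key] = value  # path to the PGM file
        elif value.startswith("["):
            meta[key] = [float(v) for v in value.strip("[]").split(",")]
        else:
            meta[key] = float(value)
    return meta


def classify_pixel(value, meta):
    """Map an 8-bit pixel to 100 (occupied), 0 (free) or -1 (unknown)."""
    # Darker pixels mean higher occupancy probability unless negate is set.
    p = value / 255.0 if meta["negate"] else (255 - value) / 255.0
    if p > meta["occupied_thresh"]:
        return 100
    if p < meta["free_thresh"]:
        return 0
    return -1


def pixel_to_world(row, col, height, meta):
    """World (x, y) of the lower-left corner of image pixel (row, col).

    Image rows are counted from the top, but origin is the pose of the
    lower-left pixel, so the row index is flipped. Yaw is ignored.
    """
    res = meta["resolution"]
    ox, oy, _yaw = meta["origin"]
    return ox + col * res, oy + (height - 1 - row) * res


if __name__ == "__main__":
    meta = parse_map_yaml(MAP_YAML)
    print([classify_pixel(v, meta) for v in (0, 205, 254)])  # [100, -1, 0]
    print(pixel_to_world(599, 0, 600, meta))  # (-12.2, -12.2)
```

The three sample values are black, mid-gray and near-white. With these thresholds they come out as occupied, unknown and free, which matches the way the image is read.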

The second file, the .pgm image, is a grayscale image of the map that you can open with any image editor.

GIMP (the GNU Image Manipulation Program) is a good choice for opening and editing PGM files.

The gray area represents unknown, unexplored space.

The white area represents free space.

The black area represents obstacles (e.g., walls).

You can also open this file in a text editor such as gedit or kate to inspect its header; in a P5 file the pixel data after the header is binary, so that part will not be readable.

The first four lines of a typical PGM file look like this:

P5

# CREATOR: GIMP PNM Filter Version 1.1

600 600

255

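The first line, P5, identifies the file type (a binary grayscale PGM).

The second line, starting with #, is a comment.

The third line gives the width and height in pixels.

The last line gives the maximum gray value, 255 in this case, which is the brightest value (white); 0 is black. With negate: 0, bright pixels are read as free and dark pixels as occupied, so a value of 0 represents an occupied cell. map_saver writes free cells as 254, occupied cells as 0, and unknown cells as 205.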
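To see how these header fields are laid out, here is a minimal stdlib-only sketch that reads the header of a binary (P5) PGM and returns the offset where the pixel bytes start. It assumes an 8-bit file (maxval below 256) and the single whitespace byte after maxval that the PGM format requires. `parse_pgm_header` is a hypothetical helper, not part of any ROS package.

```python
def parse_pgm_header(data):
    """Return (magic, width, height, maxval, offset) for binary PGM bytes.

    offset is the index of the first pixel byte.
    """
    tokens = []
    i, n = 0, len(data)
    while len(tokens) < 4:
        # Skip whitespace between header fields.
        while i < n and data[i:i + 1].isspace():
            i += 1
        if i >= n:
            raise ValueError("truncated PGM header")
        if data[i:i + 1] == b"#":
            # A comment (e.g. GIMP's CREATOR line) runs to the end of the line.
            while i < n and data[i:i + 1] not in (b"\n", b"\r"):
                i += 1
            continue
        start = i
        while i < n and not data[i:i + 1].isspace() and data[i:i + 1] != b"#":
            i += 1
        tokens.append(data[start:i])
    magic = tokens[0].decode("ascii")
    if magic != "P5":
        raise ValueError(f"expected binary PGM (P5), got {magic!r}")
    width, height, maxval = (int(t) for t in tokens[1:])
    # A single whitespace byte separates maxval from the pixel data.
    return magic, width, height, maxval, i + 1


if __name__ == "__main__":
    header = b"P5\n# CREATOR: GIMP PNM Filter Version 1.1\n600 600\n255\n"
    data = header + bytes(600 * 600)  # all-zero (black) pixels as filler
    magic, width, height, maxval, offset = parse_pgm_header(data)
    print(magic, width, height, maxval)  # P5 600 600 255
    print(len(data) - offset == width * height)  # True
```

After the header, `data[offset:offset + width * height]` holds one byte per pixel, row by row starting from the top of the image.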

Description of turtlebot_navigation gmapping_demo.launch:

First, it starts the 3dsensor launch file, which runs the TurtleBot's 3D sensor (either an Asus Xtion Pro Live or a Kinect).

It sets some parameters to their default values and specifies that the scan topic is named /scan.

Then it starts gmapping.launch.xml, which contains the parameters of the gmapping SLAM algorithm (including the particle filter parameters) responsible for building the map.

Finally, it starts move_base.launch.xml, which initializes the parameters of the navigation stack, including the global planner and the local planner.

Practical Considerations

When building a map with a TurtleBot, whether you get a good or a bad map depends on several factors:

First of all, make sure that your TurtleBot and its laptop have fully charged batteries before you start mapping, since gmapping and rviz both consume a lot of power.

The quality of the generated map depends heavily on the quality of the range and odometry sensors. The TurtleBot's Kinect or Asus Xtion Pro Live camera has a range of only about 4 meters and a horizontal field of view of only 57 degrees, which makes it poorly suited to scanning large, open environments. If you use the TurtleBot to map a small environment where the walls are close to each other, you are likely to get an accurate map.
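The 4 m and 57 degree figures can be turned into a quick back-of-the-envelope check. The sketch below applies width = 2 r tan(fov / 2) to the numbers quoted above. It ignores the sensor's minimum range and any beams lost on dark or reflective surfaces, so treat the result as a best case.

```python
import math


def footprint_width(max_range_m, fov_deg):
    """Width covered by a sensor's horizontal field of view at max range."""
    return 2.0 * max_range_m * math.tan(math.radians(fov_deg) / 2.0)


def fraction_of_full_turn(fov_deg):
    """Share of a 360-degree sweep covered by one scan."""
    return fov_deg / 360.0


if __name__ == "__main__":
    width = footprint_width(4.0, 57.0)
    share = fraction_of_full_turn(57.0)
    print(f"{width:.2f} m wide at 4 m, {share:.0%} of a full turn")
    # 4.34 m wide at 4 m, 16% of a full turn
```

A single scan sees at most a strip about 4.3 m wide. With the robot in the middle of a hall wider than 8 m, the side walls are beyond 4 m and return nothing, which leaves gmapping little structure to match against.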

However, if you build a map of a large, open environment, the map is likely to become inaccurate at some point. In such an environment you have to drive the robot over long distances, so odometry plays a more crucial role in building the map. Because the TurtleBot's odometry is unreliable and error-prone, these errors accumulate over time and quickly degrade the quality of the generated map.

It is worth using your TurtleBot to build maps of both a small and a large environment and comparing the accuracy of the map in each case.

ADVANCED
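A toy dead-reckoning loop makes the accumulation argument concrete. The drift of 0.2 degrees of heading per meter used below is a hypothetical figure chosen for illustration, not a measured TurtleBot value. Each heading error is carried into every later step, so the position error grows faster than the distance driven.

```python
import math


def dead_reckon(distance_m, drift_deg_per_m, step_m=1.0):
    """Integrate odometry for a robot that truly drives straight along +x.

    The heading estimate drifts by drift_deg_per_m every meter (a
    hypothetical figure). Returns the believed (x, y); y is the error.
    """
    x = y = yaw = 0.0
    for _ in range(round(distance_m / step_m)):
        yaw += math.radians(drift_deg_per_m * step_m)  # new heading error...
        x += step_m * math.cos(yaw)  # ...carried into every later step
        y += step_m * math.sin(yaw)
    return x, y


if __name__ == "__main__":
    for d in (10.0, 40.0):
        _, err = dead_reckon(d, drift_deg_per_m=0.2)
        print(f"after {d:.0f} m: {err:.2f} m sideways")
    # after 10 m: 0.19 m sideways
    # after 40 m: 2.86 m sideways
```

Quadrupling the distance multiplies the error by roughly fifteen. At the 0.05 m resolution of the example map, even the 10 m error already spans about four cells.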

gmapping package:


slam_gmapping package: slam_gmapping contains a wrapper around gmapping which provides SLAM capabilities.

To use slam_gmapping, you need a mobile robot that provides odometry data and is equipped with a horizontally-mounted, fixed, laser range-finder.

The slam_gmapping node will attempt to transform each incoming scan into the odom (odometry) tf frame.
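The frame conversion slam_gmapping relies on is ordinary 2-D rigid-body composition. A laser return is first moved from the laser frame into base_link using the sensor's mounting pose, then into odom using the odometry pose. The sketch below shows only that geometry, the kind of result tf provides. It is not slam_gmapping's code, and the 0.1 m laser offset in the usage line is a hypothetical mounting position.

```python
import math


def compose(pose, point):
    """Apply a 2-D pose (x, y, yaw) to a point given in that pose's frame."""
    x, y, yaw = pose
    px, py = point
    return (x + px * math.cos(yaw) - py * math.sin(yaw),
            y + px * math.sin(yaw) + py * math.cos(yaw))


def scan_point_in_odom(odom_pose, laser_mount, rng, bearing):
    """Express one laser return (range, bearing) in the odom frame.

    odom_pose:   pose of base_link in odom, as reported by odometry.
    laser_mount: pose of the laser frame in base_link (hypothetical values).
    """
    in_laser = (rng * math.cos(bearing), rng * math.sin(bearing))
    in_base = compose(laser_mount, in_laser)
    return compose(odom_pose, in_base)


if __name__ == "__main__":
    # Robot at (1, 2) facing +y; laser 0.1 m ahead of base_link; beam straight ahead.
    x, y = scan_point_in_odom((1.0, 2.0, math.pi / 2), (0.1, 0.0, 0.0), 1.0, 0.0)
    print(f"{x:.2f} {y:.2f}")  # 1.00 3.10
```

Because every beam passes through the odom pose, an error in that pose shifts every projected point in the scan by the same rigid offset.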

For example, to make a map from a robot whose laser publishes scans on the base_scan topic:

$ rosrun gmapping slam_gmapping scan:=base_scan

10-mapping

(1) On the TurtleBot (via ssh):

$ roslaunch turtlebot_bringup minimal.launch

$ roslaunch turtlebot_navigation gmapping_demo.launch

(2) On the local workstation:

$ roslaunch turtlebot_rviz_launchers view_navigation.launch

$ roslaunch turtlebot_teleop keyboard_teleop.launch

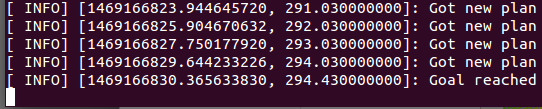

(3) Using teleoperation from the local machine, drive the TurtleBot around the entire area you wish to map.

(4) When complete, save the map on the robot (via ssh). map_saver's -f option takes the output path without an extension and adds .pgm and .yaml itself:

$ rosrun map_server map_saver -f /home/dehaou1404/zMAP/my_map_half

NOTE: do not use a shortcut such as ~; use an absolute path such as /home…

(5) You can find the map image (.pgm) and its .yaml file in the output directory:

$ ls /home/dehaou1404/zMAP/