TK1: MNIST -09

Deep learning has several well-known open-source frameworks, such as Caffe, Theano, and Torch. Caffe's software stack looks like this:

* Caffe has several dependencies:

-CUDA is required for GPU mode. Caffe requires the CUDA nvcc compiler to compile its GPU code and CUDA driver for GPU operation.

-BLAS via ATLAS, MKL, or OpenBLAS. Caffe requires BLAS as the backend of its matrix and vector computations. There are several implementations of this library:ATLAS, Intel MKL or OpenBLAS.

-Boost, version >= 1.55

-protobuf, glog, gflags, hdf5

* Optional dependencies:

-OpenCV >= 2.4 including 3.0

-IO libraries: lmdb, leveldb (note: leveldb requires snappy)

-cuDNN for GPU acceleration (v5). For best performance, Caffe can be accelerated by NVIDIA cuDNN.

* Pycaffe and Matcaffe interfaces have their own natural needs.

-For Python Caffe: Python 2.7 or Python 3.3+, numpy (>= 1.7), boost-provided boost.python.

The main requirements are numpy and boost.python (provided by boost). pandas is useful too and needed for some examples.

-For MATLAB Caffe: MATLAB with the mex compiler. Install MATLAB, and make sure that its mex is in your $PATH.

1 – Mnist

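Caffe ships with two demos, mnist and cifar10. The mnist demo in particular is known as the "hello world" of Caffe programming.

MNIST is a well-known database of handwritten digits, said to have been written by American high-school students, and it is maintained by Yann LeCun.

MNIST was originally used to recognize handwritten digits on checks; today it is the standard entry-level exercise for deep learning.

The model built specifically for MNIST recognition is LeNet, one of the earliest CNN models.

The first Caffe "hello world" is therefore usually recognition of the MNIST handwritten digits, which takes three main steps:

preparing the data, editing the configuration, and using the model.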

REF: http://yann.lecun.com/exdb/mnist/

http://caffe.berkeleyvision.org/gathered/examples/mnist.html

1.1 Preparing the data

* Download the data:

$ ./data/mnist/get_mnist.sh

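When the script finishes, the data/mnist/ directory contains four files (the sizes below are those of the compressed .gz downloads):

train-images-idx3-ubyte: training set images (9912422 bytes)

train-labels-idx1-ubyte: training set labels (28881 bytes)

t10k-images-idx3-ubyte: test set images (1648877 bytes)

t10k-labels-idx1-ubyte: test set labels (4542 bytes)

MNIST has 60000 training samples and 10000 test samples. Each sample is a 28*28 grayscale image of a handwritten digit from 0 to 9, so there are 10 classes.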
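The file names hint at the IDX layout (idx3 for three dimensions, idx1 for one, ubyte for unsigned bytes). As a minimal sketch, assuming the IDX header layout described on the MNIST page (two zero bytes, a data type byte, a dimension count byte, then one big-endian 32-bit size per dimension), the header can be parsed as below. The function name parse_idx_header and the synthetic header are made up for illustration; no real MNIST data is read.

```python
import struct

def parse_idx_header(raw):
    """Parse the header of an IDX file and return (data type code, dims)."""
    # Bytes 0-1 are zero, byte 2 is the data type, byte 3 is the number of dimensions.
    zero, dtype_code, ndim = struct.unpack(">HBB", raw[:4])
    if zero != 0:
        raise ValueError("not an IDX file")
    # One big-endian unsigned 32-bit integer per dimension follows.
    dims = struct.unpack(">" + "I" * ndim, raw[4:4 + 4 * ndim])
    return dtype_code, dims

# Synthetic header shaped like train-images-idx3-ubyte (hypothetical bytes, no pixel data).
fake_header = struct.pack(">HBBIII", 0, 0x08, 3, 60000, 28, 28)
dtype_code, dims = parse_idx_header(fake_header)
print(dtype_code, dims)
```

To try it on a real file, read the first 16 bytes of an unpacked image file (or the first 8 bytes of a label file) in binary mode and pass them to the function.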

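* Convert the data:

The files above cannot be used by Caffe directly; they first have to be converted to LMDB:

$ ./examples/mnist/create_mnist.sh

After a successful conversion, two folders are created under examples/mnist/: mnist_train_lmdb and mnist_test_lmdb. The data.mdb and lock.mdb files inside them are the data needed for the run.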

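1.2 About protobuf

Protocol Buffers, protobuf for short, is a lightweight and efficient structured data format open-sourced by Google. It is used to serialize structured data. Everything protobuf does can also be done with XML: information in some data structure is saved in a defined format for storage, transport protocols, and similar uses.

One reason for building something new instead of using XML is efficiency; another is that protobuf has good multi-language support (C++, Java, Python) together with a code generation mechanism.

The interface definition used by Protocol Buffers is simple and looks like this: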

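package caffe; // define the namespace

message helloworld // define a message (class)

{ // define the fields

required int32 xx = 1; // a required value

optional int32 xx = 2; // an optional value

repeated xx xx = 3; // a repeatable value

enum xx { // define an enum

xx = 1;

}

}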

For example, suppose the language is C++ and module A sends a huge number of orders to module B over a socket.

First write a proto file, say Order.proto, and add a message named "Order" to it:

message Order

{

required int32 time = 1;

required int32 userid = 2;

required float price = 3;

optional string desc = 4;

}

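Order.proto can then be compiled with protoc, the protobuf compiler:

$ protoc -I=. --cpp_out=. ./Order.proto

protobuf generates Order.pb.cc and Order.pb.h automatically.

In the sending module A, the order wrapper class can be serialized with code like this: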

$ vi Sender.cpp

Order order;

order.set_time(XXXX);

order.set_userid(123);

order.set_price(100.0f);

order.set_desc("a test order");

string sOrder;

order.SerializeToString(&sOrder);

// send the serialized string through the socket library

In the receiving module B, it can be parsed like this:

$ vi Receiver.cpp

string sOrder;

// first receive the data through the network library and store it in the string sOrder

Order order;

if(order.ParseFromString(sOrder)) // parse the string

{

cout << "userid:" << order.userid() << endl

<< "desc:" << order.desc() << endl;

}

else

cerr << "parse error!" << endl;

Finally, compile the files:

$ g++ Sender.cpp -o Sender Order.pb.cc -I /usr/local/protobuf/include -L /usr/local/protobuf/lib -lprotobuf -pthread

Then test it.
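To give a feel for what the serialized string contains, here is a minimal sketch of how a single non-negative int32 field is laid out on the wire, assuming the standard protobuf encoding (a tag varint built from the field number and wire type 0, followed by the value as a base-128 varint). The helper names encode_varint and encode_int32_field are invented for this illustration; real code should use the classes generated by protoc.

```python
def encode_varint(value):
    """Encode a non-negative integer as a protobuf base-128 varint."""
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)

def encode_int32_field(field_number, value):
    """Encode one non-negative int32 field: tag varint, then value varint."""
    tag = (field_number << 3) | 0  # wire type 0 means varint
    return encode_varint(tag) + encode_varint(value)

# userid is field 2 in the Order message; 123 is the value set by the sender.
payload = encode_int32_field(2, 123)
print(payload.hex())
```

Two bytes are enough for this field, which illustrates why the binary format is compact compared with a textual format such as XML.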

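1.3 About caffe.proto

caffe.proto uses Google protobuf to define the structured data that Caffe needs.

The same folder also contains caffe.pb.h and caffe.pb.cc, both generated by compiling caffe.proto.

$ vi src/caffe/proto/caffe.proto

syntax = "proto2"; // proto2 is also the default

package caffe; // all messages live in the caffe package; use them via "using namespace caffe;" or caffe::**

Note:

A .proto file defines the structure; a .prototxt file holds the configuration.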

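1.4 Caffe structure

Caffe defines a network layer by layer, bottom-up from the input data to the final output.

Caffe can be understood at four levels: Blob, Layer, Net, and Solver. They relate to each other as follows: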

* Blob

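A blob is the data unit that runs through the whole framework. Blobs store all the data in the network (values and derivatives) and allocate memory on the CPU and GPU on demand. As Caffe's basic data structure, a blob is usually a four-dimensional array of shape Batch×Channel×Height×Width, in which the element at coordinate (n,k,h,w) is located at offset ((n * K + k) * H + h) * W + w. Blobs hold the neuron activations and the network parameters, together with the corresponding gradients (the activation residuals and dW, db). A blob exposes cpu_data, gpu_data, cpu_diff, gpu_diff, mutable_cpu_data, mutable_gpu_data, mutable_cpu_diff, and mutable_gpu_diff, a set of similar-looking accessors for the data stored on the CPU and on the GPU. The data accessors hold the activations and W, b; the diff accessors hold the residuals and dW, db. The mutable and non-mutable versions of an accessor point to the same location; the non-mutable one is read-only and the mutable one is writable.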
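The offset formula can be checked with a short sketch. This is plain Python rather than Caffe code; blob_offset is a name chosen for the illustration, and the shape is the conv1 output of LeNet with a batch size of 64.

```python
def blob_offset(n, k, h, w, shape):
    """Flat offset of element (n, k, h, w) in a row-major N x K x H x W blob."""
    N, K, H, W = shape
    return ((n * K + k) * H + h) * W + w

shape = (64, 20, 24, 24)

# Walking the indices in row-major order must visit offsets 0, 1, 2, ...
expected = 0
for n in range(2):  # the first two images are enough for the check
    for k in range(shape[1]):
        for h in range(shape[2]):
            for w in range(shape[3]):
                assert blob_offset(n, k, h, w, shape) == expected
                expected += 1

# The second image starts right after the 20 * 24 * 24 values of the first one.
print(blob_offset(1, 0, 0, 0, shape))
```

The width index varies fastest, so the values of one image row sit next to each other in memory.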

* Layer

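A Layer represents one of the many kinds of layers in a neural network; layers are combined to form a network. Typically an image or sample is read in by the data layer and then passed on layer by layer. Apart from the somewhat special data layers, most layers implement four functions: LayerSetUp, Reshape, Forward, and Backward. LayerSetUp initializes the layer, allocating space, filling in initial values, and so on. Reshape sets the dimensions of the layer's blobs to match its input. Forward is forward propagation and Backward is backward propagation.

How is data passed between layers? Every layer has one or more bottom and top blobs, which hold its input and output respectively. bottom[0]->cpu_data() holds the input activations and top holds the output; cpu_diff() holds the activation residuals, and the gpu accessors refer to the data on the GPU. If a layer has several inputs or outputs, there are also bottom[1], bottom[2], and so on. A layer's parameters are stored in this->blobs_: usually this->blobs_[0] holds W and this->blobs_[1] holds b. this->blobs_[0]->cpu_data() is the value of W and this->blobs_[0]->cpu_diff() is its gradient dW; b and db work the same way. Switch to the gpu accessors for the data on the GPU, and add mutable to make them writable.

Every layer that can run on the GPU has a .cpp file and a .cu file with the same name; the computation in the .cu file mostly calls CUDA kernel functions.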

* Net

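A Net stacks the various Layers together as defined in train_val.prototxt. It first initializes every layer and then performs updates repeatedly: each update runs one full forward pass and one full backward pass and then applies the gradients computed by each layer. Note that a layer's Backward only computes dW and db; W and b are updated together at the end, in the Net's Update. When training a model in Caffe there are usually two Nets, one for training and one for testing.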

* Solver

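A Solver trains the Net according to the parameters in solver.prototxt. It first initializes a training net and a test net, and its Step function then iterates over the network. Each iteration repeats two steps: ComputeUpdateValue computes the update-related quantities, such as the learning rate and the weight-decay term, and the Net's Update function then updates the whole network.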

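The overall flow is:

The solver initializes the Net from the parameters in solver.prototxt. The Net then reads train_val.prototxt to create the corresponding Layers and feeds the data blobs into them. Each Layer processes the data flowing in and returns the resulting blobs, which the Net passes on to the next Layer. Every solver iteration increments the iteration count and then adjusts values such as the learning rate and the weight decay.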
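The update step at the end of each iteration can be sketched as follows. This is a simplified pure-Python stand-in for SGD with momentum and L2 weight decay, not Caffe's actual implementation; the function name sgd_step, the toy loss, and the starting weights are assumptions made for the illustration.

```python
def sgd_step(weights, grads, history, lr, momentum, weight_decay):
    """One SGD-with-momentum step over flat lists of floats, updated in place."""
    for i in range(len(weights)):
        # Weight decay adds an L2 penalty term to the gradient.
        g = grads[i] + weight_decay * weights[i]
        # The history keeps a decaying sum of the previous updates.
        history[i] = momentum * history[i] + lr * g
        weights[i] -= history[i]

weights = [1.0, -2.0]
history = [0.0, 0.0]
for _ in range(3):
    grads = [2.0 * w for w in weights]  # gradient of the toy loss sum(w^2)
    sgd_step(weights, grads, history, lr=0.01, momentum=0.9, weight_decay=0.0005)
print(weights)
```

Both weights move toward zero, the minimum of the toy loss, and the steps grow while the momentum term accumulates.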

* A simple Net example:

name: "logistic-regression"

layer {

name: "mnist"

type: "Data"

top: "data"

top: "label"

data_param {

source: "your-source"

batch_size: your-size

}

}

layer {

name: "ip"

type: "InnerProduct"

bottom: "data"

top: "ip"

inner_product_param {

num_output: 2

}

}

layer {

name: "loss"

type: "SoftmaxWithLoss"

bottom: "ip"

bottom: "label"

top: "loss"

}

1.5 LeNet

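LeNet is a good fit for MNIST handwritten digit recognition. The LeNet model structure is as follows:

Caffe, however, uses a modified LeNet whose activation function is the Rectified Linear Unit (ReLU); it is defined in lenet_train_test.prototxt. This modified LeNet contains two convolution layers, two pooling layers, and two fully connected layers, the last of which does the classification, like this:

Two files need to be configured:

lenet_train_test.prototxt for the training part;

lenet_solver.prototxt for the solver part.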

1.6 Configuring the training part

$ vi $CAFFE_ROOT/examples/mnist/lenet_train_test.prototxt

1.6.0 Give the network a name

name: "LeNet" # name of the neural network

1.6.1 Data Layer

Define the data layer for the TRAIN phase.

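layer {

name: "mnist" # name of this layer

type: "Data" # the layer type is Data; other data layer types include MemoryData, HDF5Data, HDF5Output, ImageData, and so on

include {

phase: TRAIN # this layer is used only in the TRAIN phase

}

transform_param {

scale: 0.00390625 # normalization factor 1/256, mapping pixel values into [0,1)

} # preprocessing, e.g. mean subtraction, resizing, random cropping, mirroring

data_param {

source: "examples/mnist/mnist_train_lmdb" # path to the training data; required

backend: LMDB # the default is LEVELDB

batch_size: 64 # batch size: the data layer reads 64 records from the LMDB at a time

}

top: "data" # this layer produces a data blob

top: "label" # this layer produces a label blob

# so the layer produces two blobs: data and label

}

This layer is named mnist, its type is Data, its source is an LMDB, and its batch size is 64. The scale factor 1/256 = 0.00390625 normalizes pixel values into the range [0,1). The layer produces two blobs: data and label.

This configures the training input.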
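The effect of the scale factor is easy to check by hand. The sketch below is plain Python, not Caffe's data transformer, and scale_pixels is a name made up for the illustration; it shows that the largest pixel value, 255, maps to just under 1.

```python
SCALE = 0.00390625  # 1/256, the value of transform_param.scale

def scale_pixels(pixels):
    """Multiply raw 0..255 pixel values by the scale factor."""
    return [p * SCALE for p in pixels]

scaled = scale_pixels([0, 128, 255])
print(scaled)
```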

1.6.2 Data Layer

Define the data layer for the TEST phase.

layer {

name: "mnist"

type: "Data"

top: "data"

top: "label"

include {

phase: TEST # this layer is used only in the TEST phase

}

transform_param {

scale: 0.00390625

}

data_param {

source: "examples/mnist/mnist_test_lmdb" # path to the test data

batch_size: 100

backend: LMDB

}

}

This configures the test input.

1.6.3 Convolution Layer -1

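Define convolution layer 1.

layer {

name: "conv1" # the layer is named conv1, i.e. convolution layer 1

type: "Convolution" # the layer type is convolution

param { lr_mult: 1 } # learning-rate multiplier for the weights w; 1 means the same as the global rate (base_lr: 0.01 in lenet_solver.prototxt)

param { lr_mult: 2 } # learning-rate multiplier for the biases b; 2 means twice the global rate (base_lr: 0.01 in lenet_solver.prototxt)

convolution_param {

num_output: 20 # 20 outputs, i.e. 20 feature maps, each of size ((data_size - kernel_size) / stride + 1) * ((data_size - kernel_size) / stride + 1)

kernel_size: 5 # the convolution kernel is 5*5

stride: 1 # the kernel moves with a stride of 1

weight_filler {

type: "xavier" # xavier initialization scales the initial weights automatically from the number of input and output neurons

}

bias_filler {

type: "constant" # initialize the biases to a constant, 0 by default

}

}

bottom: "data" # the input is the data blob provided by the data layer

top: "conv1" # the output is the conv1 blob

}

For this layer:

the parameters have shape (20,1,5,5) and (20,),

the input is (64,1,28,28),

the convolution output is (64,20,24,24),

data flow: 64*1*28*28 -> 64*20*24*24

Computation: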

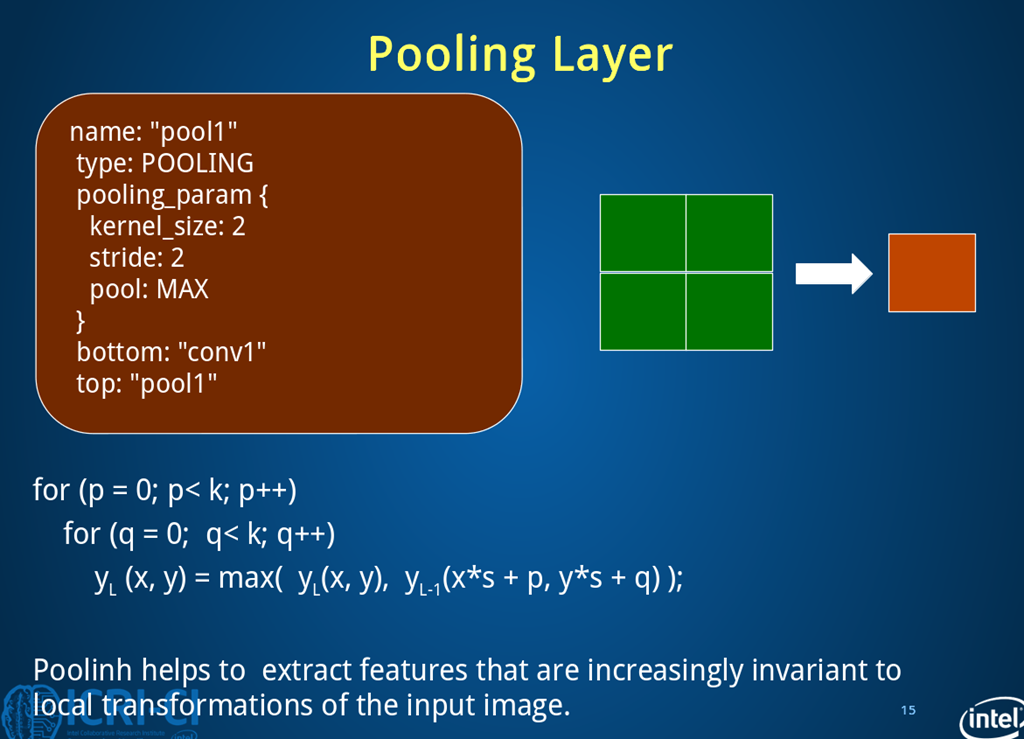
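The 24*24 output size and the number of learnable parameters of conv1 can be reproduced with a little arithmetic. The sketch assumes the usual output-size formula for a convolution, (input + 2 * pad - kernel) / stride + 1; conv_output_size is a helper name invented here, not a Caffe function.

```python
def conv_output_size(in_size, kernel_size, stride=1, pad=0):
    """Spatial output size of a convolution along one dimension."""
    return (in_size + 2 * pad - kernel_size) // stride + 1

out = conv_output_size(28, kernel_size=5, stride=1)
weights = 20 * 1 * 5 * 5  # num_output x input channels x kernel height x kernel width
biases = 20               # one bias per output feature map
print(out, weights + biases)
```

With 28*28 inputs and a 5*5 kernel, only 24 kernel positions fit along each dimension, which is where the shrinkage from 28 to 24 comes from.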

1.6.4 Pooling Layer -1

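Define pooling layer 1.

layer {

name: "pool1"

type: "Pooling"

pooling_param {

pool: MAX # max pooling

kernel_size: 2 # the pooling window is 2*2

stride: 2 # the window moves with a stride of 2, so windows do not overlap

}

bottom: "conv1" # the input is the conv1 blob produced by the conv1 layer

top: "pool1" # the output is the pool1 blob

}

For this layer:

the output is (64,20,12,12); there are no weights or biases.

data flow: 64*20*24*24 -> 64*20*12*12

Computation: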
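As a sketch of what MAX pooling with kernel_size 2 and stride 2 computes on one feature map, the pure-Python function below keeps the largest value of every non-overlapping 2*2 window. It assumes an even height and width; max_pool_2x2 and the sample values are made up for the illustration.

```python
def max_pool_2x2(image):
    """Non-overlapping 2x2 max pooling (kernel_size 2, stride 2) on a 2D list."""
    rows, cols = len(image), len(image[0])
    return [
        [max(image[r][c], image[r][c + 1], image[r + 1][c], image[r + 1][c + 1])
         for c in range(0, cols - 1, 2)]
        for r in range(0, rows - 1, 2)
    ]

feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 0, 5, 6],
    [1, 2, 7, 8],
]
pooled = max_pool_2x2(feature_map)
print(pooled)
```

Each dimension is halved, which matches the step from 24*24 to 12*12 in this layer.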

1.6.5 Conv2 Layer

What remains is a second convolution layer (num_output=50, kernel_size=5, stride=1) and a second pooling layer.

Define convolution layer 2.

layer {

name: "conv2"

type: "Convolution"

param { lr_mult: 1 }

param { lr_mult: 2 }

convolution_param {

num_output: 50

kernel_size: 5

stride: 1

weight_filler { type: "xavier" }

bias_filler { type: "constant" }

}

bottom: "pool1"

top: "conv2"

}

For this layer:

the output is (64,50,8,8)

data flow: 64*20*12*12 -> 64*50*8*8

1.6.6 Pool2 Layer

Define pooling layer 2.

layer {

name: "pool2"

type: "Pooling"

bottom: "conv2"

top: "pool2"

pooling_param {

pool: MAX

kernel_size: 2

stride: 2

}

}

For this layer:

the output is (64,50,4,4)

data flow: 64*50*8*8 -> 64*50*4*4.

1.6.7 Fully Connected Layer -1

Define fully connected layer 1.

Caffe calls a fully connected layer an InnerProduct layer (IP layer).

layer {

name: "ip1"

type: "InnerProduct" # the layer type is fully connected

param { lr_mult: 1 }

param { lr_mult: 2 }

inner_product_param {

num_output: 500 # 500 outputs

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

}

}

bottom: "pool2"

top: "ip1"

}

For this layer:

the parameters have shape (500,800) and (500,),

the output is (64,500,1,1),

data flow: 64*50*4*4 -> 64*500*1*1.

The fully connected layer flattens C*H*W into a 1D feature vector, i.e. 50*4*4=800 -> 500=500*1*1.
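The chain of shapes from the input up to ip2 can be traced in a few lines. This sketch assumes unpadded windows and a batch size of 64, and takes the layer parameters from the definitions in this section; window_output is a helper name invented for the illustration.

```python
def window_output(size, kernel, stride):
    """Output size of a convolution or pooling window without padding."""
    return (size - kernel) // stride + 1

batch, channels, size = 64, 1, 28
shapes = [("data", (batch, channels, size, size))]

# (name, output channels or None for pooling, kernel size, stride)
for name, num_output, kernel, stride in [
    ("conv1", 20, 5, 1), ("pool1", None, 2, 2),
    ("conv2", 50, 5, 1), ("pool2", None, 2, 2),
]:
    size = window_output(size, kernel, stride)
    channels = num_output or channels  # pooling keeps the channel count
    shapes.append((name, (batch, channels, size, size)))

flattened = channels * size * size  # number of inputs seen by ip1
shapes.append(("ip1", (batch, 500)))
shapes.append(("ip2", (batch, 10)))

for name, shape in shapes:
    print(name, shape)
print("ip1 weight shape:", (500, flattened))
```

The ip1 weight matrix has one row per output and one column per flattened pool2 value.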

1.6.8 ReLU Layer

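Define the ReLU1 layer, i.e. the non-linearity.

layer {

name: "relu1"

type: "ReLU" # ReLU, the rectified linear unit, is an activation function that plays a role similar to sigmoid

bottom: "ip1"

top: "ip1" # bottom and top are the same blob, which saves memory

# optionally, relu_param { negative_slope: ... } sets the slope of the negative half-axis for a leaky ReLU

}

For this layer:

the output stays (64,500),

data flow: 64*500*1*1 -> 64*500*1*1.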

Since ReLU is an element-wise operation, we can do in-place operations to save some memory. This is achieved by simply giving the same name to the bottom and top blobs. Of course, do NOT use duplicated blob names for other layer types!
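A minimal sketch of an element-wise, in-place ReLU, written in plain Python rather than taken from Caffe: the input list is overwritten, so no second buffer is needed. The function name relu_in_place and the sample values are assumptions for the illustration; negative_slope mirrors the optional relu_param setting.

```python
def relu_in_place(values, negative_slope=0.0):
    """Apply ReLU element-wise, overwriting the input list."""
    for i, x in enumerate(values):
        # Positive part passes through; negative part is scaled by the slope.
        values[i] = max(x, 0.0) + negative_slope * min(x, 0.0)
    return values

activations = [-2.0, -0.5, 0.0, 1.5]
relu_in_place(activations)
print(activations)
```

Because each output depends only on the input at the same position, overwriting is safe here; a layer that mixes several inputs per output, such as a convolution, could not reuse its input buffer this way.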

1.6.9 innerproduct layer -2

Define fully connected layer 2.

layer {

name: "ip2"

type: "InnerProduct"

param { lr_mult: 1 }

param { lr_mult: 2 }

inner_product_param {

num_output: 10 # 10 outputs, one for each handwritten digit 0-9

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

}

}

bottom: "ip1"

top: "ip2"

}

For this layer:

the parameters have shape (10,500) and (10,),

the output is (64,10),

data flow: 64*500*1*1 -> 64*10*1*1

1.6.10 Loss Layer

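Define the loss layer.

layer {

name: "loss"

type: "SoftmaxWithLoss" # multi-class classification computes the loss with softmax regression

bottom: "ip2"

bottom: "label" # uses the label blob produced by the data layer, which finally comes into play here; no top is declared

}

Data flow through the loss layer: 64*10*1*1 -> a single scalar loss value (the softmax inside the layer keeps the 64*10*1*1 shape).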
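What a softmax loss computes can be sketched in plain Python: the scores of each sample are turned into probabilities, the negative log of the probability of the true label is taken, and the result is averaged over the batch. This is an illustration under those assumptions, not Caffe's implementation; softmax_loss and batch_loss are invented names.

```python
import math

def softmax_loss(scores, label):
    """Cross-entropy loss of one sample: -log(softmax(scores)[label])."""
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    prob = exps[label] / sum(exps)
    return -math.log(prob)

def batch_loss(batch_scores, labels):
    """Average the per-sample losses over the batch."""
    losses = [softmax_loss(s, y) for s, y in zip(batch_scores, labels)]
    return sum(losses) / len(losses)

# A net that scores all 10 digits equally has a loss of ln(10).
print(batch_loss([[0.0] * 10, [0.0] * 10], [3, 7]))
```

A loss near ln(10), about 2.3, is therefore what an untrained 10-class model is expected to show at the start of training.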

1.6.11 accuracy layer

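The accuracy layer. Caffe's LeNet defines an accuracy layer that computes the accuracy only in the TEST phase. It consists of just name, type, bottom, top, and include { phase: TEST }, and it is the layer that reports the test result.

layer {

name: "accuracy"

type: "Accuracy"

bottom: "ip2"

bottom: "label"

top: "accuracy"

include {

phase: TEST

}

}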
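The accuracy reported by such a layer is the fraction of samples whose highest-scoring class matches the label. A minimal pure-Python sketch, with made-up scores and an invented function name:

```python
def accuracy(batch_scores, labels):
    """Fraction of samples whose highest-scoring class equals the label."""
    correct = 0
    for scores, label in zip(batch_scores, labels):
        predicted = max(range(len(scores)), key=scores.__getitem__)
        if predicted == label:
            correct += 1
    return correct / len(labels)

scores = [[0.1, 2.0, 0.3], [1.5, 0.2, 0.1], [0.0, 0.1, 0.9], [0.3, 0.2, 0.1]]
print(accuracy(scores, [1, 0, 2, 2]))
```

Three of the four hypothetical samples are classified correctly, so the sketch prints 0.75.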

1.6.12 The whole process


REF: https://www.cnblogs.com/xiaopanlyu/p/5793280.html

1.6.13 Additional Notes: Writing Layer Rules

By default, that is without layer rules, a layer is always included in the network.

Layer definitions can include rules for whether and when they are included in the network definition, like:

layer {

# ...layer definition...

include: { phase: TRAIN }

}

This is a rule, which controls layer inclusion in the network, based on current network’s state.

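1.7 Configuring the solver part

With the network prototxt in place, a solver is still needed. The solver defines how the model parameters are updated and optimized. For example, the last line is:

solver_mode: GPU

Change GPU to CPU to train on the CPU. Because the MNIST dataset is small, the difference between training on the GPU and on the CPU is not as noticeable as it is with ImageNet.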

$ vi ./examples/mnist/lenet_solver.prototxt

# the network used for training and testing

net: "examples/mnist/lenet_train_test.prototxt" # path to the network definition file

# test_iter specifies how many forward passes the test should carry out. In the case of MNIST, we have test batch size 100 and 100 test iterations, covering the full 10,000 testing images.

test_iter: 100 # number of test iterations; batch size 100 * 100 iterations = size of the test set

# Carry out testing every 500 training iterations.

test_interval: 500 # run one test pass every 500 training iterations

# The base learning rate, momentum and the weight decay of the network.

base_lr: 0.01 # base learning rate

momentum: 0.9 # momentum

weight_decay: 0.0005 # weight decay

# The learning rate policy

lr_policy: "inv" # inv returns base_lr * (1 + gamma * iter) ^ (- power)

gamma: 0.0001

power: 0.75

# Display every 100 iterations

display: 100

# The maximum number of iterations

max_iter: 10000

# snapshot intermediate results

snapshot: 5000 # save the parameters every 5000 iterations

snapshot_prefix: "examples/mnist/lenet" # snapshot prefix: without it the file is iter_<iterations>.caffemodel, with it lenet_iter_<iterations>.caffemodel

# solver mode: CPU or GPU

solver_mode: GPU
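The "inv" policy is easy to evaluate by hand. The sketch below simply implements the formula quoted in the lr_policy comment with the values from this solver file; inv_learning_rate is a name chosen for the illustration.

```python
def inv_learning_rate(iteration, base_lr=0.01, gamma=0.0001, power=0.75):
    """Learning rate under the "inv" policy: base_lr * (1 + gamma * iter) ^ (-power)."""
    return base_lr * (1.0 + gamma * iteration) ** (-power)

for iteration in (0, 100, 5000, 10000):
    print(iteration, inv_learning_rate(iteration))
```

For iteration 100 the formula gives roughly 0.00992565, the same value that the training log reports as "Iteration 100, lr = 0.00992565".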

The supported solver types are:

Stochastic Gradient Descent (type: "SGD"),

AdaDelta (type: "AdaDelta"),

Adaptive Gradient (type: "AdaGrad"),

Adam (type: "Adam"),

Nesterov's Accelerated Gradient (type: "Nesterov") and

RMSprop (type: "RMSProp")

1.8 Training the model

Once the network definition protobuf and the solver protobuf files are written, training is simple:

$ cd ~/caffe

$ ./examples/mnist/train_lenet.sh

—

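Read the two configuration files, lenet_solver.prototxt and lenet_train_test.prototxt

… …

Create each layer of the network: data layer – convolution layer (conv1) – pooling layer (pool1) – fully connected layer (ip1) – non-linearity – loss layer

… …

Work out which layers need the backward computation

… …

Print the test network and its creation process

… …

Start optimizing the parameters

… …

Enter the iterative optimization loop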

I1203 solver.cpp:204] Iteration 100, lr = 0.00992565

I1203 solver.cpp:66] Iteration 100, loss = 0.26044

I1203 solver.cpp:84] Testing net

I1203 solver.cpp:111] Test score #0: 0.9785

I1203 solver.cpp:111] Test score #1: 0.0606671

#0: the accuracy,

#1: the loss

For each training iteration, lr is the learning rate of that iteration, and loss is the training function. For the output of the testing phase, score 0 is the accuracy, and score 1 is the testing loss function. And after a few minutes, you are done!

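Here the whole training run takes considerably longer, about 20 minutes, and the final model accuracy is above 0.989.

The trained model is stored as a binary protobuf file at:

./examples/mnist/lenet_iter_10000.caffemodel

At this point the model is trained and ready for later use.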

REF: http://caffe.berkeleyvision.org/gathered/examples/mnist.html

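1.9 Using the model

To recognize handwritten digits with the model, someone has provided a Python script that classifies an image of a handwritten digit: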

$ vi end_to_end_digit_recognition.py

# manual input image requirement: white background, black digit

# system input image requirement: black background, white digit

# loading setup

caffe_root = "/home/ubuntu/sdcard//caffe-for-cudnn-v2.5.48/"

model_weights = caffe_root + "examples/mnist/lenet_iter_10000.caffemodel"

model_def = caffe_root + "examples/mnist/lenet.prototxt"

image_path = caffe_root + "data/mnist/sample_digit_1.jpeg"

# set up Python environment: numpy for numerical routines, PIL for image loading

import numpy as np

import scipy.misc  # imresize lives in scipy.misc and was removed in SciPy 1.3

import os.path

import time

# import matplotlib.pyplot as plt

from PIL import Image

import sys

sys.path.insert(0, caffe_root + 'python')

import caffe

# caffe.set_mode_cpu()

caffe.set_device(0)

caffe.set_mode_gpu()

# setup a network according to model setup

net = caffe.Net(model_def,      # defines the structure of the model

                model_weights,  # contains the trained weights

                caffe.TEST)     # use test mode (e.g., don't perform dropout)

exist_img_time = 0

while True:

    try:

        # only run the network when the image file has changed

        new_img_time = time.ctime(os.path.getmtime(image_path))

        if new_img_time != exist_img_time:

            # read image and convert to grayscale

            image = Image.open(image_path, 'r')

            image = image.convert('L')  # makes it greyscale

            image = np.asarray(image.getdata(), dtype=np.float64).reshape((image.size[1], image.size[0]))

            # convert image to suitable size

            image = scipy.misc.imresize(image, [28, 28])

            # since the system requires a black background and a white digit

            inputs = 255 - image

            # reshape input to suitable shape

            inputs = inputs.reshape([1, 28, 28])

            # change input data to test image

            net.blobs['data'].data[...] = inputs

            # forward processing of network

            start = time.time()

            net.forward()

            end = time.time()

            output_prob = net.blobs['ip2'].data[0]  # the ip2 class scores for the first image in the batch

            print('predicted class is: %d' % output_prob.argmax())

            duration = end - start

            print('%s s' % duration)

            exist_img_time = new_img_time

    except IndexError:

        pass

    except IOError:

        pass

    except SyntaxError:

        pass

Test:

$ python ./end_to_end_digit_recognition.py

—

I1228 17:22:30.683063 19537 net.cpp:283] Network initialization done.

I1228 17:22:30.698748 19537 net.cpp:761] Ignoring source layer mnist

I1228 17:22:30.702311 19537 net.cpp:761] Ignoring source layer loss

predicted class is: 3

0.0378859043121 s

The digit is recognized as "3".