机器人操作系统ROS(25):TF框架

线性代数和矩阵论

惯性导航里的dcm、四元数

刚体力学

欧拉角

1、预备

在机器人的很多api中,存在着target frame,source frame,parent frame,child frame,这些不同名字的坐标系。

* source frame、target frame 是坐标变换的概念,source是坐标变换的源坐标系,一般称参考坐标系,target是目标坐标系,这个是把物体在source坐标系内的运动变换到target坐标系内的运动。

* parent frame、child frame是坐标系变换的概念,parent是原坐标系,child是变换后的坐标系,从parent坐标系到child坐标系的变换也就是child坐标系在parent坐标系下的描述。如果把child固结于一个刚体,那么这个变换描述的就是刚体在parent坐标系下的姿态。

这里有个巧合,当然也不是巧合,就是把child内的运动描述变换为parent内的运动描述、等于从parent到child的坐标系的变换,牵扯到线性代数里的基变换、线性变换、过渡矩阵的概念。

在线性空间里,一个矩阵不但可以描述在同一个基下把一个点运动到另一个点的线性变换,还可以描述一个基在另外一个基下的表示,也可以表示一个基到另一个基的线性变换。不过,如果单说坐标变换就简单了。所谓坐标变换就是把一个点在某个坐标系的描述,变换成在另外一个坐标系下的描述。要实现这个变换,比较简单的做法就是用当前坐标系在参考坐标系下的描述(一个矩阵)去描述(乘以)这个点在当前坐标系下的描述(坐标)。

经过多次旋转的坐标系该怎么在固定坐标系下描述呢。这里有一个前乘和后乘的问题。什么时候前,什么时候后?很简单,绕固定坐标系的轴旋转就前乘,绕动坐标系就后乘。为什么是这样呢?解释起来很复杂,但可以这样理解,绕固定轴旋转时,你把这个旋转看做是线性变换,这个变换把原来的矩阵里的各列向量旋转变换成了新的向量,所以前乘。当绕动坐标系旋转时,你把这个旋转矩阵看做是一个描述了,并且这个描述是在未乘之前的矩阵这个描述下的描述,所以要放到后面。

刚体的任一姿态(任一动坐标系)可以经由三次基本旋转得到,用三个角来描述,这就是欧拉角。在tf里eulerYPR指的是绕动坐标系的zyx旋转,RPY指的是绕固定坐标系xyz旋转,这二者等价,坐标系定义为右手,x前,y左,z上。

四元数是刚体姿态的另一种描述方式,理论基础是,刚体姿态可以经过某一特定轴经一次旋转一定角度得到,等价于欧拉角,等价于旋转矩阵。一个四个参数,一个三个参数,一个九个参数,之所以等价是因为四个参数里有一个约束,九个参数里有六个约束。

1.1、ROS_TF

tf库的目的是实现系统中任一个点在所有坐标系之间的坐标变换,也就是说,只要给定一个坐标系下的一个点的坐标,就能获得这个点在其他坐标系的坐标。

为了达到这个目的,就需要确定各个坐标系之间的关系,也就是获得任一坐标系在其他任一个坐标系下的描述. 假设有n个坐标系,那么他们之间的组合关系有c(n,2)个,如果n=4,那就是6种,如果n=100,那就是4950个,好多,不过世界没那么复杂,我们可以给这些坐标系之间构建一个结构,比如单叉树或者单层多叉树,那么100个坐标系之间的关系,就可以用99个树杈搞定。tf里的选择则是多层多叉树。

维护一颗树,需要先设计一个树的结构,也就是一定要在树被风吹来吹去变换的时候,让小树杈找个大树杈依附,让大树杈找个树干依附,让树干有个根固定。(这里提个建议,如果打算用tf解决坐标变换问题,请一定要先清晰的画出这棵树的结构,再开始写程序;因为这个结构是维护和使用的基础)。这个结构的建立和维护靠的是tf提供的tfbroadcastor类的sendtransform接口。在tb第一次发布一个从已有的parent frame到新的child frame的坐标系变换时,这棵树就会添加一个树枝,之后就是维护。

如何使用这棵树?很简单,用tf的tflisener监听某一个指定的从一个a frame到b frame的变换即可,当然前提是树上的树杈们能把a,b联通,并已经准备好。再重复一次,这个变换是a frame到b frame的变换,也表示了b frame在a frame的描述,也代表了把一个点在b frame里的坐标变换成在a frame里的坐标的坐标变换。有了这个变换,你就可以尽情的变换了。

实现的原理,非常简单,也非常粗暴。基本原理是,tb的类里有个publisher,tl的类里有个subscriber,一个发布叫/tf的topic,一个订阅这个topic,传送的消息tfmessage里包含了parent frameid和child frameid的信息。

这个机制意味着,所有的tb会发布某一特定的parent到child的变换,而所有tl会收到所有的这些变换,然后tl利用一个tfbuffercore的数据结构维护一个完整的树结构及其状态。基于此,tl在使用这棵树时,会用lookuptransform或waitfortransform来获得任意坐标系之间的变换。

非常简单,但粗暴的也非常显然。那就是只要是一个tl,就要跟所有tb建立连接,就要收取所有的/tf消息,来维护一棵完整的树,并且,还要负责搜索这棵树,找到一条变换的路径,然后乘呀乘,并且每次都要如此。

1.2、tf库的优缺点

先说优点,能用且易上手:

a、各种数值计算的细节,不用考虑,tf库可以帮你;

b、接口很简洁,会广播和监听就ok;

c、问题找的很准,那就是需要维护坐标系之间的关系;

d、提供了很多工具程序;

e、考虑了与时间相关的变换;

f、支持tf-prefix,可以在多机器人上用

g、基本能用,且不需要深入理解,死搬硬套就能用起来。

还是说缺点吧。还是那句话,简单粗暴!

a、树的结构很简单,但有时候很笨拙。举个例子吧。有部电影叫手机,葛优扮演的叫石头,范伟演的叫砖头,两个人是叔伯兄弟,从小到大的小伙伴,应该很熟悉,关系也很明确,但要放到树的结构下,就需要从下到上找共同先辈,然后从这个先辈再往下找,进而确定二者的关系,这个比较笨拙。于是有了范伟坐在门槛上的一句台词:恁这是弄啥勒,恁奶不是俺奶?!

b、每个tl都要维护同一颗树,这样的开销太大,主要是网络传输的负荷比较大。这一点,tf的设计者是完全承认的。举个例子吧,北方的有些地方的农村过年时要拜年,拜年要磕头,磕头的对象是写有逝去的先辈名字的树状图,一个同姓大家族里有很多小家庭,每个家里都有那么一张图,挨家拜年,街上人来人往,每个人都磕了很多头,磕的其实是同一张。如果有个祠堂,集中处理,维护一张图,就不用看到早早起床、满街跑的情况,效率也会高多了。

c、很难满足实时性的要求,这一点比较显然。要不tf也不会每个变换存10秒钟的数据,不过源码里好像是开了一个存100个消息的队列。

d、还有很多细节不易理解。比如,now()和time(0);比如,技术文档里的一些术语名词;比如,采用了机器人里的习惯,与飞行器,惯导,车辆里的习惯区别较大,使用时不能相当然。

综上,用不用tf需要平衡,那里用,那里不用,用什么代替,也需要平衡,不是一件很轻松愉快的事。

2、正式开始

机器人具有多个坐标框架,例如world/ base/ gripper/ head, 例如target frame,source frame,parent frame,child frame, 通过tf随时更新这些框架,就可以回答以下问题:

Where was the head frame relative to the world frame, and 5 seconds ago?

What is the pose of the object in my gripper relative to my base?

What is the current pose of the base frame in the map frame?

一个矩阵实际上定义了一个描述的视角。对空间里的一个点做描述。一个点比较绝对的描述是MX,其中M是坐标系的姿态,X是坐标向量,MX的含义就是在坐标系M里坐标为X的那个点。对于Y=MX,可以看作是IY=MX。也就是说,在I坐标系里坐标为Y的一个点和在M系里坐标为X的是同一个点。

所以,虚拟世界里的工程师安德森与锡安里的尼欧是同一个点在不同坐标系下不同的坐标描述。

1.极简教程,tf introduction demo

2. tf调试指令

2.7 static_transform_publisher

3. tf APIs

3.2 Transformations and Frames

3.3 Broadcasting Transforms

3.4 Using Published Transforms

4. Writing a tf broadcaster

5. Writing a tf listener

6. Adding a frame

7. About tf and time

8. Time travel with tf

9. debug a typical tf problem

10. Using sensor messages with tf

11. Setting up your robot using tf

12. Publishing Sensor Streams Data Over ROS

13. Using robot state publisher

14 Using urdf with robot_state_publisher

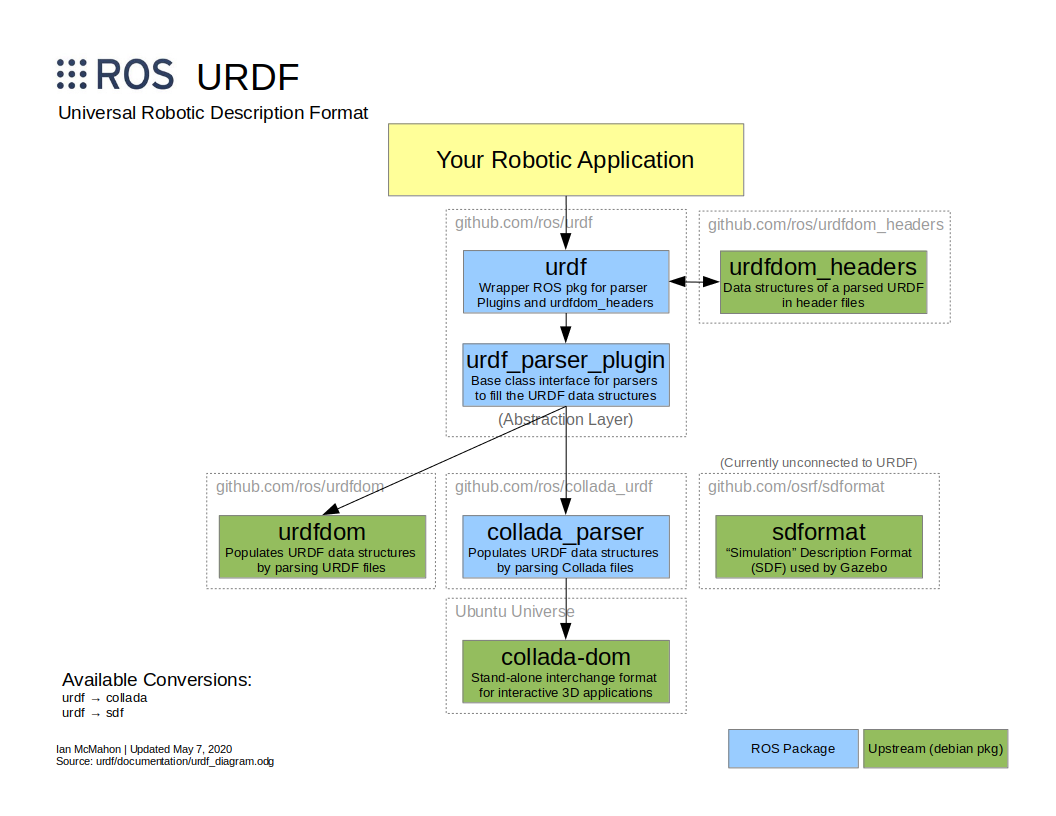

So far, a number of different packages and components make up urdf:

NOTE:坐标变换,有个很巧合的,child frame在parent frame的姿态描述(也就是把一个在child的point转化为在parent的描述)等于从parent到child的frame transform。

所谓坐标变换,就是把一个点在某个坐标系的描述,变换成在另外一个坐标系下的描述。

要实现这个变换,简单做法就是用当前坐标系在参考坐标系下的描述矩阵,去乘这个点在当前坐标系下的坐标。

所以我们知道当前坐标系在参考坐标系的描述,就可以把当前坐标系里任一点的坐标变换成参考坐标系里的坐标。

TF树结构:

假设有n个坐标系,那么他们之间的组合关系有c(n,2)个. 如果n=4,那就是6种. 如果n=100,那就是4950个.TF树的结构特点是单亲,因为怕矛盾.

维护树:TF树结构的建立和维护是靠tf提供的tfbroadcastor的sendtransform接口。tb发布一个从已有的parent frame到新的child frame的坐标系变换时,这棵树就会添加一个树枝,之后就是维护。

使用树:用tf的tflisener监听某一个指定的从一个a frame到b frame的变换即可,当然前提是树上的树杈能把a,b联通。这个变换是a frame到b frame的变换,也表示了b frame在a frame的描述,也代表了把一个点在b frame里的坐标变换成在a frame里的坐标的坐标变换。

2.1.极简教程,tf introduction demo

It shows off some of the tf power in a multi-robot example using turtlesim.

This also introduces using tf_echo, view_frames, rqt_tf_tree, and rviz.

1.1 安装依赖

$ sudo apt-get install ros-indigo-ros-tutorials ros-indigo-geometry-tutorials ros-indigo-rviz ros-indigo-rosbash ros-indigo-rqt-tf-tree

1.2 运行

$ roslaunch turtle_tf turtle_tf_demo.launch

可见两支小乌龟

1.3 跟随

用键盘方向键控制移动,可见一只始终跟随另一只。

图

1.4 实现

这是通过tf库构建三个框架而实现的:world , frame/turtle1 , frame/turtle2 frame。

还使用了广播机制:一只龟发布坐标,另一只龟监听坐标并计算差异实现跟从。

This tutorial uses a tf broadcaster to publish the turtle coordinate frames , and , a tf listener to compute the difference in the turtle frames and move one turtle to follow the other.

2.2. tf调试指令

Although tf is mainly a code library here comes with a large set of command-line tools that assist in the debugging and creation of tf coordinate frames.

2.1 $ roswtf

This plugin will analyze your current tf configuration and attempt to find common problems. some warnning msg can be ignored.

2.2 view_frames

$ rosrun tf view_frames

– Detected dot version 2.26.3

– frames.pdf generated

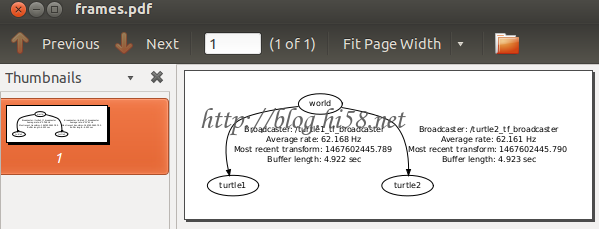

这个指令建立tf经由ROS发布的框架的框图。此时tf监听器 监听经由ROS发布的框架并绘制树形图 frames.pdf。

查看该树形图:

$ evince frames.pdf

可见tf正在广播三个框架:world/ turtle1/ turtle2,还额外显示了一些附加信息。

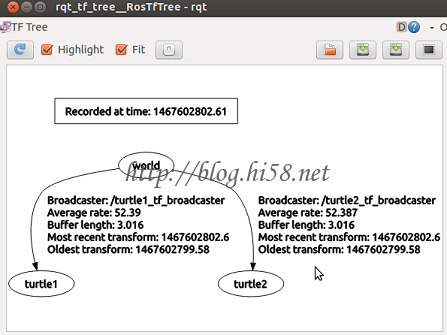

2.3 rqt_tf_tree

这个也可以可视化正在广播的框架的树形图,并且可以刷新。

$ rosrun rqt_tf_tree rqt_tf_tree

也可以:

$ rqt

select plugins–visulization–tftree.

2.4 tf_echo

tf_echo 该指令汇报经由ROS发布的两个框架的转换

$ rosrun tf tf_echo turtle1 turtle2

At time 1416409795.450

– Translation: [0.000, 0.000, 0.000]

– Rotation: in Quaternion [0.000, 0.000, 0.914, 0.405]

in RPY [0.000, -0.000, 2.308]

2.5 rviz

这指令更具有可视化

rosrun rviz rviz -d `rospack find turtle_tf`/rviz/turtle_rviz.rviz

驱动turtle1,这里可视化观测。通过-d指明了配置文件位置。

2.6 tf_monitor

$ rosrun tf tf_monitor

$ rosrun tf tf_monitor

Monitor a specific transform. For example to monitor the transform from /base_footprint to /odom:

$ rosrun tf tf_monitor /base_footprint /odom

$ rosrun tf tf_monitor /base_footprint /odom

RESULTS: for /base_footprint to /odom

Chain currently is: /base_footprint -> /odom

Net delay avg = 0.00371811: max = 0.012472

Frames:

Frame: /base_footprint published by /robot_pose_ekf Average Delay: 0.0465218 Max Delay: 0.051754

Frame: /odom published by /realtime_loop Average Delay: 0.00062444 Max Delay: 0.001553

Broadcasters:

Node: /realtime_loop 95.3222 Hz, Average Delay: 0.00062444 Max Delay: 0.001553

Node: /robot_pose_ekf 30.9654 Hz, Average Delay: 0.0465218 Max Delay: 0.051754

Node: /robot_state_publisher 25.9839 Hz, Average Delay: 0.00903061 Max Delay: 0.00939562

Here is:

$ rosrun tf tf_monitor turtle1 turtle2

2.7 static_transform_publisher

a) $ static_transform_publisher x y z yaw pitch roll frame_id child_frame_id period_in_ms

Which publish a static coordinate transform to tf using an x/y/z offset in meters and yaw/pitch/roll in radians.

The period, in milliseconds specifies how often to send a transform, 100ms (10hz) is a good value.

b) $ static_transform_publisher x y z qx qy qz qw frame_id child_frame_id period_in_ms

It publish a static coordinate transform to tf using an x/y/z offset in meters and quaternion. The period, in milliseconds, specifies how often to send a transform. 100ms (10hz) is a good value.

c) launch

Not only static_transform_publisher is designed both as a command-line tool for manual use,

bu also for use within roslaunch files for setting static transforms.

For example:

2.3. tf APIs

TF涉及的集中数据类型。

3.1 Data Types

data types are defined in file tf/transform_datatypes.h:

Type——–tf

Point——-tf::Point

Vector——tf::Vector3

Quaternion–tf::Quaternion

Pose——–tf::Pose

Transform—tf::Transform

3.2 Transformations and Frames

Remeber coordinate systems in ROS are always in 3D and right-handed, with X forward, Y left, and Z up.

a) A frame,is a coordinate system.

b) Points within a frame,are represented using tf::Point, which is equivalent to the bullet type btVector3. The coordinates of a point p in a frame W are written as Wp.

c) Frame Pose, The relationship between two frames is represented by a 6 DOF relative pose: a translation, and followed by a rotation.

If W and A are two frames, the pose of A in W,is given by translation from W origin to A origin, and, rotation of A coordinate axes in W coordinate.

The translation is a vector in W coordinates, represented as WtA which read as: “Translation from frame W to A coordinate system”,, which is represented by tf::Vector3.

The rotation of A is given by a rotation matrix, represented as WAR which read as: “Rotation of frame A in W coordinate system”,

NOTE: There is no tf type for a rotation matrix; instead, tf represents rotations via tf::Quaternion, which has methods for creating quaternions from rotation matrices, and vice versa.

SO: It’s convenient to describe the translation + rotation in coordinates as a single 4×4 matrix WAT which can be read as: “the pose of frame A relative to frame W.” .

The 4×4 matrix WAT is constructed both rotation WAR and linear WtA.

d) Point Mappings

There is a duality between frame poses and mapping points from one frame to another.

The pose WAT can also be read as “transform a point in A frame to W.” The syntax gives a “cancellation” of the A frame is: WAT * Ap = Wp.

e) Transform Inverse

The inverse of a transform WAT is the transform AWT. The inverse maps points in the reverse direction from the original transform. Inverses are conveniently calculated using the inverse() member function of btTransform.

3.3 Broadcasting Transforms

To broadcast transforms within a node, we recommend using the tf::TransformBroadcaster.

tf::TransformBroadcaster();

To send a transform call sendTransform() with a fully populated transform.

void sendTransform(const StampedTransform & transform);

void sendTransform(const geometry_msgs::TransformStamped & transform);

3.4 Using Published Transforms

For most purposes using tf will be done using the tf::TransformListener.

It inherits several methods from tf::Transformer.

The primary methods which should be used when interacting with a tf::TransformListener are:

几种方法:

a) Constructors

TransformListener(const ros::NodeHandle &nh, ros::Duration max_cache_time=ros::Duration(DEFAULT_CACHE_TIME), bool spin_thread=true)

e.g.

TransformListener(ros::Duration max_cache_time=ros::Duration(DEFAULT_CACHE_TIME), bool spin_thread=true)

b) Helper methods

std::string tf::TransformListener::resolve (const std::string &frame_id)

Determine the fully resolved frame_id obeying the tf_prefix.

d) waitForTransform

Test if source_frame can be transformed to target_frame at time time.

The waitForTransform() methods return a bool whether the transform can be evaluated. It will sleep and retry every polling_duration until the duration of timeout has been passed.

g) lookupTransform

lookupTransform() is a lower level method which returns the transform between two coordinate frames. This method is the core functionality of the tf library, however most often the transform* methods will be used by the end user. This methods is designed to be used within transform*() methods.

The direction of the transform returned will be from the target_frame to the source_frame. Which if applied to data, will transform data in the source_frame into the target_frame.

3.5 Exceptions

tf::ConnectivityException

tf::ExtrapolationException

tf::InvalidArgument

tf::LookupException

3.6 tf_prefix

To support multiple “similar” robots tf uses a tf_prefix parameter. Without a tf_prefix parameter the frame name “base_link” will resolve to frame_id “/base_link”.

Next, 4 and 56 will setup above example by ourself.

先总结:

a. turtle_broadcaster节点,订阅了turtle1的姿态主题pose,然后根据pose发布world->tuetle1的tf框架变换。通常pose时定周期发布的,因此tf变换也是定周期发布。

b. turtle_listener节点,在一个死循环里面查询turtle2->turtle1的tf框架变换,然后根据框架关系,发布计算后的速度信号cmd_vel给turtle2。问题:首先速度没有pid也没有插补,其次由于tf定周期发布,所以这个节点也就不停的发布速度信号,电机废了。

c. frame_tf_broadcaster

实际的应用:

a. 下位机,发布里程信号odom。

b. pc的amcl订阅odom,得到自身位置以及速度信号twist,发布出去。

2.4. Writing a tf broadcaster

4.1 准备

$ cd ~/catkin_ws/src

$ catkin_create_pkg learning_tf tf roscpp rospy turtlesim

$ cd ..

$ catkin_make

$ source ./devel/setup.bash

4.2 代码

This teaches you how to broadcast coordinate frames to tf.

We want to broadcast the changing coordinate frames of the turtles while they move around.

这段代码在teleop操作turtlebot_name时候,随时广播world->turtlebot_name在world的变换,在tf_tree上建立了world->turtlebot_name这条边。

$ roscd learning_tf

$ nano src/turtle_tf_broadcaster.cpp

#include

#include //an implementation of a TransformBroadcaster

#include

std::string turtle_name;

void poseCallback(const turtlesim::PoseConstPtr& msg){

static tf::TransformBroadcaster br; //create a TransformBroadcaster object

tf::Transform transform; // create a Transform object

transform.setOrigin( tf::Vector3(msg->x, msg->y, 0.0) );

tf::Quaternion q; q.setRPY(0, 0, msg->theta);

transform.setRotation(q);

br.sendTransform(tf::StampedTransform(transform, ros::Time::now(), “world”, turtle_name)); //This is where the real work is done

///NOTE///: sendTransform and StampedTransform have opposite ordering of parent and child.

}

int main(int argc, char** argv){

ros::init(argc, argv, “my_tf_broadcaster”);

if (argc != 2){ROS_ERROR(“need turtle name as argument”); return -1;};

turtle_name = argv[1];

ros::NodeHandle node;

ros::Subscriber sub = node.subscribe(turtle_name+”/pose”, 10, &poseCallback);

ros::spin();

return 0;

};

修改CMakeLists.txt,在尾部添加:

$ vi CMakeLists.txt

add_executable(turtle_tf_broadcaster src/turtle_tf_broadcaster.cpp)

target_link_libraries(turtle_tf_broadcaster ${catkin_LIBRARIES})

cd ~/catkin_ws

$ catkin_make

二进制文件turtle_tf_broadcaster生成于devel/lib/learning_tf 之下。

4.3 脚本start_demo.launch

$ roscd learning_tf

$ mkdir launch

$ nano launch/start_demo.launch

4.4 测试

$ roslaunch learning_tf start_demo.launch

只显示一只乌龟

查看是否在发布乌龟姿态

$ rosrun tf tf_echo /world /turtle1

–ok, showing x y z r p y data.

$ rosrun tf tf_echo /world /turtle2

–fail, because tutle2 was not exist here.

2.5. Writing a tf listener

already we created a tf broadcaster to publish the pose of a turtle to tf.

Here we’ll create a tf listener to start using tf.

NOTE: it will NOT compile on ROS Hydro, due to a slight change in the naming of certain messages and topics.

$ roscd learning_tf

$ nano src/turtle_tf_listener.cpp

#include

#include

#include

#include

int main(int argc, char** argv){

ros::init(argc, argv, “my_tf_listener”);

ros::NodeHandle node;

ros::service::waitForService(“spawn”);

ros::ServiceClient add_turtle =

node.serviceClient(“spawn”);

turtlesim::Spawn srv;

add_turtle.call(srv);

ros::Publisher turtle_vel =

node.advertise(“turtle2/cmd_vel”, 10);

tf::TransformListener listener;

ros::Rate rate(10.0);

while (node.ok()){

tf::StampedTransform transform;

// try{

// listener.lookupTransform(“/turtle2”, “/turtle1”, ros::Time(0), transform);

// }

// catch (tf::TransformException &ex) {

// ROS_ERROR(“%s”,ex.what());

// ros::Duration(1.0).sleep();

// continue;

// }

// NOTE:If error “Lookup would require extrapolation into the past” while running,

// try this alternative code to call the listener

try { // We want the transform from frame /turtle1 to frame /turtle2.

listener.waitForTransform(“/turtle2”, “/turtle1”, ros::Time(0), ros::Duration(10.0) );

listener.lookupTransform(“/turtle2”, “/turtle1”, ros::Time(0), transform );

} catch (tf::TransformException &ex) {

ROS_ERROR(“%s”,ex.what());

}

geometry_msgs::Twist vel_msg;

// the transform is used to calculate new linear and angular velocities for turtle2 , based on its distance and angle from turtle1

// The new velocity is published in the topic “turtle2/cmd_vel” and the sim will use that to update turtle2’s movement.

vel_msg.angular.z = 4.0 * atan2(transform.getOrigin().y(),

transform.getOrigin().x());

vel_msg.linear.x = 0.5 * sqrt(pow(transform.getOrigin().x(), 2) +

pow(transform.getOrigin().y(), 2));

turtle_vel.publish(vel_msg);

//while transform of t2 -> t1 is iavailable then cal new linear and angular data for turtle2

//based on its distance and angle from turtle1

//New velocity is published in the topic “turtle2/cmd_vel”

rate.sleep();

}

return 0;

}

HINTS: turtle2 is the dest child we needed, tutlr1 is the source parent we based.

$ vi CMakeLists.txt

add the following line on the bottom:

add_executable(turtle_tf_listener src/turtle_tf_listener.cpp)

target_link_libraries(turtle_tf_listener ${catkin_LIBRARIES})

$ cd ~/catkin_ws

$ catkin_make

ok

$ vi launch/start_demo.launch

merge the node block below inside the block:

…

测试

$ roslaunch learning_tf start_demo.launch

You should see the turtlesim with two turtles.

$ rosrun tf tf_echo /turtle1 /turtle2

ok

问题一:出现 Frame id /turtle2 does not exist! When trying to transform between /turtle1 and /turtle2.

这是由于listener在接收到关于uturtle2的消息之前它就尝试计算变化变换,因为turtlesim构造turtle以及开始broadcasting tf frame需要一些时间。

问题二:出现”turtle2″ passed to lookupTransform argument target_frame does not exist.

这是由于在turtlesim完成构造turtle2并broadcasting tf2 frame之前listener就尝试计算变换。

2.6. Adding a frame

In the previous tutorials we recreated the turtle demo by adding a tf broadcaster and a tf listener. This tutorial will teach you how to add an extra frame to the tf tree. This is very similar to creating the tf broadcaster, and will show some of the power of tf.

6.1 Why to add frames?

For many tasks it is easier to think inside a local frame, e.g. it is easier to reason about a laser scan in a frame at the center of the laser scanner.

tf allows you to define a local frame for each sensor, link, etc in your system. And, tf will take care of all the extra frame transforms that are introduced.

6.2 Where to add frames?

TF builds up a tree structure of frames; it does not allow a closed loop in the frame structure. This means that a frame only has one single parent, but it can have multiple children.

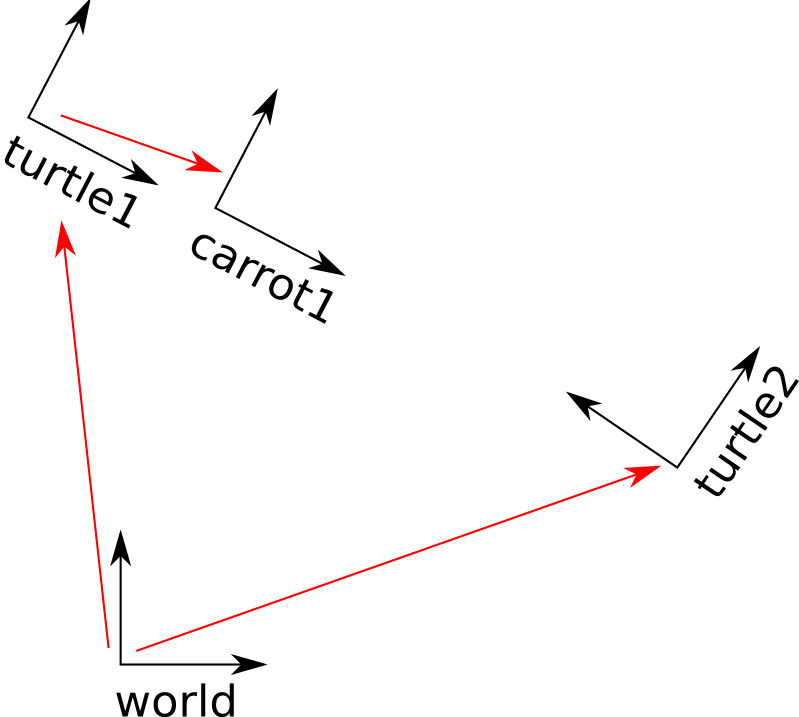

Currently our tf tree contains three frames: world, turtle1 and turtle2. The two turtles are children of world.

If we want to add a new frame to tf, one of the three existing frames needs to be the parent frame, and the new frame will become a child frame.

6.3 How to add a frame

以乌龟1为父框架添加一个carrot框架:

$ roscd learning_tf

$ nano src/frame_tf_broadcaster.cpp

#include

#include

int main(int argc, char** argv){

ros::init(argc, argv, “my_tf_broadcaster”);

ros::NodeHandle node;

tf::TransformBroadcaster br;

tf::Transform transform;

ros::Rate rate(10.0);

while (node.ok()){

transform.setOrigin( tf::Vector3(0.0, 2.0, 0.0) );

transform.setRotation( tf::Quaternion(0, 0, 0, 1) );

br.sendTransform(tf::StampedTransform(transform, ros::Time::now(), “turtle1”, “carrot1”));

//create a new transform, from the parent turtle1 to the new child carrot1.

//The carrot1 frame is 2 meters offset to the left from the turtle1 frame.

rate.sleep();

}

return 0;

}

$ vi CMakeLists.txt

add the following line on the bottom:

add_executable(frame_tf_broadcaster src/frame_tf_broadcaster.cpp)

target_link_libraries(frame_tf_broadcaster ${catkin_LIBRARIES})

$ cd ~/watkin_ws

$ catkin_make

$ vi launch/start_demo.launch

Simply merge the node block below inside the launch block::

…

测试

$ roslaunch learning_tf start_demo.launch

So, if you drive the first turtle around, you notice that the behavior didn’t change from the previous tutorial, even though we added a new frame. That’s because adding an extra frame does not affect the other frames, and our listener is still using the previously defined frames. So, let’s

6.4 Try to change the behavior of the listener.

如果把lister修改为

1 listener.lookupTransform(“/turtle2”, “/carrot1”, ros::Time(0), transform);

重新make,roslaunch,会有2米偏离。

6.5 发布移动框架

The extra frame we published in this tutorial is a fixed frame that doesn’t change over time in relation to the parent frame. However, if you want to publish a moving frame you can change the broadcaster to change over time. Let’s modify the /carrot1 frame to change relative to /turtle1 over time.

把发布框架的

transform.setOrigin( tf::Vector3(0.0, 2.0, 0.0) );

transform.setRotation( tf::Quaternion(0, 0, 0, 1) );

修改为

transform.setOrigin( tf::Vector3(2.0*sin(ros::Time::now().toSec()), 2.0*cos(ros::Time::now().toSec()), 0.0) );

transform.setRotation( tf::Quaternion(0, 0, 0, 1) );

重新make并launch,可见在追随一个运动框架。

2.7. About tf and time

In the previous tutorials we learned about how tf keeps track of a tree of coordinate frames. This tree changes over time, and tf stores a time snapshot for every transform (for up to 10 seconds by default).

Until now we used the lookupTransform() function to get access to the latest available transforms in that tf tree, without knowing at what time that transform was recorded.

This will teach you how to get a transform at a specific time.Go back to where we ended in the adding a frame tutorial.

$ roscd learning_tf

$ nano src/turtle_tf_listener.cpp

7.1 ros::Time::now()

go to the line:

listener.lookupTransform(“/turtle2”, “/carrot1”, ros::Time(0), transform);

First we make the second turtle follow the first turtle not the carrot:

listener.lookupTransform(“/turtle2”, “/turtle1”, ros::Time(0), transform);

So you can also see we specified a time equal to 0. For tf, time 0 means “the latest available” transform in the buffer.

Now, change this line to get the transform at the current time, “now()”:

listener.lookupTransform(“/turtle2”, “/turtle1”, ros::Time::now(), transform);

$ catkin_make

$ roslaunch learning_tf start_demo.launch

BUT lookupTransform() is failing, telling you repeatedly:

[ERROR] [1287871653.885277559]: You requested a transform that is 0.018 miliseconds in the past, but the most recent transform in the tf buffer is 7.681 miliseconds old. When trying to transform between /turtle1 and /turtle2.

Why is that?

Well, each listener has a buffer where it stores all the coordinate transforms coming from the different tf broadcasters. When a broadcaster sends out a transform, it takes some time before that transform gets into the buffer (usually a couple of milliseconds). So, when you request a frame transform at time “now”, you should wait a few milliseconds for that information to arrive.

7.2 Wait for transforms …

ros::Time now = ros::Time::now();

listener.waitForTransform(“/turtle2”, “/turtle1”, now, ros::Duration(3.0));

listener.lookupTransform(“/turtle2”, “/turtle1”, now, transform);

So waitForTransform() will actually block until the transform between the two turtles becomes available (this will usually take a few milliseconds), OR –if the transform does not become available– until the timeout has been reached.

Note: The use of ros::Time::now() is for this example. Usually this would be the timestamp of the data wishing to be transformed.

$ catkin_make

$ roslaunch learning_tf start_demo.launch

BUT, you might still see the error once (error msg might vary):

[ERROR] [1287872014.408401177]: You requested a transform that is 3.009 seconds in the past, but the tf buffer only has a history of 2.688 seconds. When trying to transform between /turtle1 and /turtle2.

Why it that?

This happens because turtle2 takes a non-zero time to spawn and start publishing tf frames. So the first time that you ask for now the /turtle2 frame may not have existed, when the transform is requested the transform may not exist yet and fails the first time. After the first transform all the transforms exist and the turtle behaves as expected.

7.3 CONCLUSION:

Now once again you should be able to simply drive around the first turtle using the arrow keys (make sure your terminal window is active, not your simulator window), and you’ll see the second turtle following the first turtle!

So, you noticed there is no noticeable difference in the turtle behavior. That is because the actual timing difference is only a few milliseconds. But then why did we make this change from Time(0) to now()? Just to teach you about the tf buffer and the time delays that are associated with it. For real tf use cases, it is often perfectly fine to use Time(0).

2.8. Time travel with tf

Above we discussed the basic concept of tf and time, now take this one step further, and expose one of the most powerful tf tricks.

$ roscd learning_tf

$ vi src/turtle_tf_listener.cpp

goto:

ros::Time now = ros::Time::now();

listener.waitForTransform(“/turtle2”, “/turtle1”, now, ros::Duration(1.0));

listener.lookupTransform(“/turtle2”, “/turtle1”, now, transform);

SO, instead of making the second turtle go to where the first turtle is now, make the second turtle go to where the first turtle was 5 seconds ago:

ros::Time past = ros::Time::now() – ros::Duration(5.0);

listener.waitForTransform(“/turtle2”, “/turtle1”, past, ros::Duration(1.0));

listener.lookupTransform(“/turtle2”, “/turtle1”, past, transform);

What would you expect to see? Definitely during the first 5 seconds the second turtle would not know where to go, because we do not yet have a 5 second history of the first turtle. But what after these 5 seconds? Let’s just give it a try:

$ catkin_make

$ roslaunch learning_tf start_demo.launch

BUT, your turtle driving around uncontrollably like in this screenshot? So what is happening?

We asked tf, “What was the pose of /turtle1 5 seconds ago, relative to /turtle2 5 seconds ago?”. This means we are controlling the second turtle based on where it was 5 seconds ago as well as where the first turtle was 5 seconds ago.

What we really want to ask is, “What was the pose of /turtle1 5 seconds ago, relative to the current position of the /turtle2?”.

So how can we ask tf a question like that? This API gives us the power to say explicitly when each frame is transformed. This is what the code would look like:

ros::Time now = ros::Time::now();

ros::Time past = now – ros::Duration(5.0);

listener.waitForTransform(“/turtle2”, now, “/turtle1”, past, “/world”, ros::Duration(1.0));

listener.lookupTransform(“/turtle2”, now, “/turtle1”, past, “/world”, transform);

This is an advanced API!

2.9. debug a typical tf problem

Debugging a problem with tf can be very tricky. Many tf related problems are caused by users asking tf the wrong questions.

we need useful to debug tf problems:

Understand what you are asking tf.

Check the frames in the tf buffer.

Check the timestamps in the tf buffer.

9.1 begin

Before you can debug anything in tf, you need to understand what you are asking tf. You need to know:

-The names of the two frames you are trying to transform between, and

-The time at which to transform.

9.2 Checking the Frames

So now that you know the names of the two frames you want to transform between, let’s check if tf knows about them. The easiest way to find out is to call:

$ rosrun tf tf_echo frameA frameB

if ok you should get output that looks like this:

At time 1254169758.447

– Translation: [5.556, 5.556, 0.000]

– Rotation: in Quaternion [0.000, 0.000, 0.000, 1.000]

in RPY [0.000, -0.000, 0.000]

At time 1254169759.134

– Translation: [5.556, 5.556, 0.000]

– Rotation: in Quaternion [0.000, 0.000, 0.000, 1.000]

in RPY [0.000, -0.000, 0.000]

9.3 Checking the Timestamp

The most tricky problems in tf are often caused by timestamps. Let’s first look at the timing information of the specific transform you are looking for:

$ rosrun tf tf_monitor frameA frameB

important is this:

RESULTS: for /frameA to /frameB

Chain currently is: /frameB -> /frameA

Net delay avg = 0.007317: max = 0.04489

9.4 Starting a demo application which has a number of problems.

$ roslaunch turtle_tf start_debug_demo.launch

The following error message:

[ERROR] 1254263539.785016000: Frame id /turtle3 does not exist! When trying to transform between /turtle1 and /turtle3.

The next problem:

[ERROR] 1254264620.183142000: You requested a transform that is 0.116 miliseconds in the past,

but the most recent transform in the tf buffer is 3.565 miliseconds old.

When trying to transform between /turtle1 and /turtle2.

TRY:

$ rosrun tf tf_monitor turtle2 turtle1

RESULTS: for /turtle2 to /turtle1

Chain currently is: /turtle1 -> /turtle2

Net delay avg = 0.008562: max = 0.05632

Frames:

Broadcasters:

Node: /broadcaster1 40.01641 Hz, Average Delay: 0.0001178 Max Delay: 0.000528

Node: /broadcaster2 40.01641 Hz, Average Delay: 0.0001258 Max Delay: 0.000309

The key part here is the delay for the chain from turtle2 to turtle1. The output shows there is an average delay of 8 milliseconds. This means that tf can only transform between the turtles after 8 milliseconds are passed. So, if we would be asking tf for the transformation between the turtles 8 milliseconds ago instead of “now”, tf would be able to give us an answer sometimes. Let’s test this quickly by changing to:

listener.lookupTransform(“/turtle2”, “/turtle1”, ros::Time::now()-ros::Duration(0.1), transform);

2.10. Using sensor messages with tf

how to use sensor data with tf? Some real-world examples of sensor data are: cameras, both mono and stereo , and/or, laser scans.

Suppose, a new turtle named turtle3 is created and it doesn’t have good odometry, but there is an overhead camera tracking its position and publishing it as a geometry_msgs/PointStamped message in relation to the world frame. Turtle 1 wants to know where turtle3 is compared to itself.

To do this, turtle1 must listen to the topic where turtle3’s pose is being published, wait until transforms into the desired frame are ready, and then do its operations.

To make this easier the tf::MessageFilter class is very useful. The tf::MessageFilter will take a subscription to any ros Message with a Header and cache it until it is possible to transform it into the target frame.

Setup:

$ roslaunch turtle_tf turtle_tf_sensor.launch

This will bring up turtle 1 to drive , and turtle3 moving on its own.

There is a topic turtle_pose_stamped with a geometry_msgs/PoseStamped data type stating turtle3’s position in the world frame.

To get the streaming data in the frame of turtle1 reliably we will use the following code:

2.11. Setting up your robot using tf

Many ROS packages require the transform tree of a robot to be published using the tf software library.

At an abstract level, a transform tree defines offsets in terms of both translation and rotation between different coordinate frames.

11.1 Transform Configuration

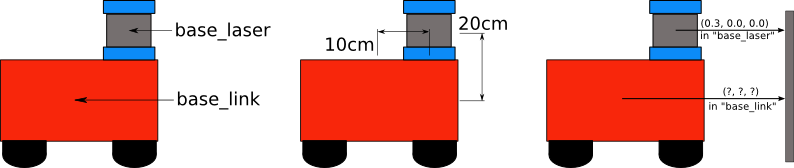

** example of a simple robot that has a mobile base with a single laser mounted on top of it. **

In referring to the robot let’s define two coordinate frames: one corresponding to the center point of the base of the robot , one for the center point of the laser that is mounted on top of the base.

We’ll call the coordinate frame attached to the mobile base “base_link” , and , call the coordinate frame attached to the laser “base_laser”.

Let’s assume that we have some laser data in the form of distances from the laser’s center point. it means we have some data in the “base_laser” coordinate frame. We want to use it to help the mobile base avoid obstacles in the world.

To do this, we need a way of transforming the laser scan we’ve received from the “base_laser” frame to the “base_link” frame. It means we need to define a relationship between the “base_laser” and “base_link” coordinate frames..

Assume we know that the laser is mounted 10cm forward and 20cm above the center point of the mobile base.

This gives us a translational offset that relates the “base_link” frame to the “base_laser” frame.

We know that to get data from the “base_link” frame to the “base_laser” frame we must apply a translation of (x: 0.1m, y: 0.0m, z: 0.2m), and to get data from the “base_laser” frame to the “base_link” frame we must apply the opposite translation (x: -0.1m, y: 0.0m, z: -0.20m).

We’ll define the relationship between “base_link” and “base_laser” once using tf and let it manage the transformation between the two coordinate frames for us rather than we do this works.

To define and store the relationship between the “base_link” and “base_laser” frames using tf, we need to add them to a transform tree. Each ‘node’ in the transform tree corresponds to a coordinate frame and each ‘edge’ corresponds to the transform that needs to be applied to move from the current node to its child.

To create a transform tree for our simple example, we’ll create two nodes, one for the “base_link” coordinate frame and one for the “base_laser” coordinate frame. To create the edge between them, we first need to decide which node will be the parent and which will be the child. This distinction is important because tf assumes that all transforms move from parent to child. Let’s choose the “base_link” coordinate frame as the parent. This means the transform associated with the edge connecting “base_link” and “base_laser” should be (x: 0.1m, y: 0.0m, z: 0.2m).

For example beow shows that object in base-laser frame is (x: 0.3m, y: 0.0m, z: 0.0m) , and same object in base-link frame is (x: 0.4m, y: 0.0m, z: 0.2m).

11.2 Writing Code

Now, we’ve got to take the transform tree and create it with code. Suppose that we have the high level task described above of taking points in the “base_laser” frame and transforming them to the “base_link” frame.

The first thing we need to do is to create a node that will be responsible for publishing the transforms in our system.

Next, we’ll have to create a node to listen to the transform data published over ROS and apply it to transform a point.

We’ll start by creating a package for the source code to live in and we’ll give it a simple name like “robot_setup_tf” We’ll have dependencies on roscpp, tf, and geometry_msgs.

$ roscd

$ cd ../src

$ catkin_create_pkg robot_setup_tf roscpp tf geometry_msgs

11.3 Broadcasting a Transform

We need to create the node that will do the work of broadcasting the base_laser → base_link transform over ROS.

$ roscd robot_setup_tf

$ nano src/tf_broadcaster.cpp

#include

#include

int main(int argc, char** argv){

ros::init(argc, argv, “robot_tf_publisher”);

ros::NodeHandle n;

ros::Rate r(100);

tf::TransformBroadcaster broadcaster;

//create a TransformBroadcaster object to send base_link → base_laser transform

while(n.ok()){

broadcaster.sendTransform(

tf::StampedTransform(

tf::Transform(tf::Quaternion(0, 0, 0, 1), tf::Vector3(0.1, 0.0, 0.2)),

ros::Time::now(),”base_link”, “base_laser”));

r.sleep();

}

}

HINTS:

broadcaster.sendTransform(), Sending a transform with a TransformBroadcaster requires five arguments need 4 params:

PARAM1 = tf::StampedTransform( PARAM1=tf::Transform(tf::Quaternion(0, 0, 0, 1), tf::Vector3(0.1, 0.0, 0.2)),

PARAM2=ros::Time::now(),

PARAM3=”base_link”,

PARAM4=”base_laser”

PARAM1:First, we pass in the rotation transform, which is specified by a btQuaternion for any rotation that needs to occur between the two coordinate frames. In this case, we want to apply no rotation, so we send in a btQuaternion constructed from pitch, roll, and yaw values equal to zero. Second, a btVector3 for any translation that we’d like to apply. We do, however, want to apply a translation, so we create a btVector3 corresponding to the laser’s x offset of 10cm and z offset of 20cm from the robot base.

And, we need to give the transform being published a timestamp, we’ll just stamp it with ros::Time::now(). And, We need to pass the name of the parent node of the link we’re creating, in this case “base_link.”

And, we need to pass the name of the child node of the link we’re creating, in this case “base_laser.”

11.4 Using a Transform

write a node that will use that transform to take a point in the “base_laser” frame and transform it to a point in the “base_link” frame.

$ nano src/tf_listener.cpp

#include

#include

#include

//create a function that, given a TransformListener,

//takes a point in “base_laser” and transforms it to “base_link” frame.

//This function serve as callback for ros::Timer created in main() and will fire every second

void transformPoint(const tf::TransformListener& listener){

//create a demo point in base_laser frame that we’d transform to base_link frame

geometry_msgs::PointStamped laser_point;

laser_point.header.frame_id = “base_laser”;

//use the most recent transform available for our simple example

laser_point.header.stamp = ros::Time();

//just an arbitrary point in space

laser_point.point.x = 1.0;

laser_point.point.y = 0.2;

laser_point.point.z = 0.0;

try{

geometry_msgs::PointStamped base_point;

listener.transformPoint(“base_link”, laser_point, base_point);

//three arguments: name of frame we want to transform point to (“base_link” here),

//the point we’re transforming, and storage for the transformed point.

ROS_INFO(“base_laser: (%.2f, %.2f. %.2f) —–> base_link: (%.2f, %.2f, %.2f) at time %.2f”,

laser_point.point.x, laser_point.point.y, laser_point.point.z,

base_point.point.x, base_point.point.y, base_point.point.z, base_point.header.stamp.toSec());

}

catch(tf::TransformException& ex){

ROS_ERROR(“Received an exception trying to transform a point from “base_laser” to “base_link”: %s”, ex.what());

}

}

int main(int argc, char** argv){

ros::init(argc, argv, “robot_tf_listener”);

ros::NodeHandle n;

tf::TransformListener listener(ros::Duration(10));

//transform a point once every second

ros::Timer timer = n.createTimer(ros::Duration(1.0), boost::bind(&transformPoint, boost::ref(listener)));

ros::spin();

}

$ vi CMakeLists.txt

Add the following lines to the bottom of the file.

add_executable(tf_broadcaster998 src/tf_broadcaster.cpp)

add_executable(tf_listener998 src/tf_listener.cpp)

target_link_libraries(tf_broadcaster998 ${catkin_LIBRARIES})

target_link_libraries(tf_listener998 ${catkin_LIBRARIES})

$ cd ../..

$ catkin_make

11.6 test

$ rosrun robot_setup_tf tf_broadcaster998

$ rosrun robot_setup_tf tf_listener998

[ INFO] 1248138528.200823000: base_laser: (1.00, 0.20. 0.00) —–> base_link: (1.10, 0.20, 0.20) at time 1248138528.19

[ INFO] 1248138529.200820000: base_laser: (1.00, 0.20. 0.00) —–> base_link: (1.10, 0.20, 0.20) at time 1248138529.19

…

NEXT, we replace the demoed PointStamped point with sensor streams data that come over ROS.

2.12. Publishing Sensor Streams Data Over ROS

The next step is to replace the PointStamped we used for this example with sensor streams that come over ROS. sensor_msgs/LaserScan messages , and , sensor_msgs/PointCloud messages.

Publishing data correctly from sensors over ROS is important for Navigation. There are many sensors that can be used to provide information to the Navigation: lasers, cameras, sonar, infrared, bump sensors, etc. However, the current navigation only accepts sensor data published using either the sensor_msgs/LaserScan Message type or the sensor_msgs/PointCloud Message type.

12.1 ROS Message Headers

Both the sensor_msgs/LaserScan and sensor_msgs/PointCloud message types, like many other messages sent over ROS, contain tf frame and time dependent information. To standardize how this information is sent, the Header message type is used as a field in all such messages.

The three fields in the Header type are shown below:

#Standard metadata for higher-level flow data types

#sequence ID: consecutively increasing ID

uint32 seq

#Two-integer timestamp that is expressed as:

# * stamp.secs: seconds (stamp_secs) since epoch

# * stamp.nsecs: nanoseconds since stamp_secs

# time-handling sugar is provided by the client library

time stamp

#Frame this data is associated with

# 0: no frame

# 1: global frame

string frame_id

* The seq field corresponds to an identifier that automatically increases as Messages are sent from a given publisher.

* The stamp field stores time information associated with data in a Message. In the case of a laser scan the stamp might correspond to the time at which the scan was taken.

* The frame_id field stores tf frame information associated with data in a message. In the case of a laser scan, this would be set to the frame in which the scan was taken.

12.2 Publishing LaserScans over ROS

a) LaserScan Message

For robots with laser scanners, ROS provides a special Message type in the sensor_msgs package called LaserScan to hold information about a given scan. Before we talk about how to generate and publish these messages, let’s take a look at the message specification itself:

Header header

float32 angle_min # start angle of the scan [rad]

float32 angle_max # end angle of the scan [rad]

float32 angle_increment # angular distance between measurements [rad]

float32 time_increment # time between measurements [seconds]

float32 scan_time # time between scans [seconds]

float32 range_min # minimum range value [m]

float32 range_max # maximum range value [m]

float32[] ranges # range data [m] (Note: values < range_min or > range_max should be discarded)

float32[] intensities # intensity data [device-specific units]

b) Code to Publish a LaserScan Message

Publishing a LaserScan message over ROS is fairly straightforward.

#include

#include

int main(int argc, char** argv){

ros::init(argc, argv, “laser_scan_publisher”);

ros::NodeHandle n;

ros::Publisher scan_pub = n.advertise(“scan”, 50);

unsigned int num_readings = 100;

double laser_frequency = 40;

double ranges[num_readings];

double intensities[num_readings];

int count = 0;

ros::Rate r(1.0);

while(n.ok()){

//generate some fake data for our laser scan

for(unsigned int i = 0; i < num_readings; ++i){ ranges[i] = count; intensities[i] = 100 + count; } ros::Time scan_time = ros::Time::now(); //populate the LaserScan message sensor_msgs::LaserScan scan; scan.header.stamp = scan_time; scan.header.frame_id = “laser_frame”; scan.angle_min = -1.57; scan.angle_max = 1.57; scan.angle_increment = 3.14 / num_readings; scan.time_increment = (1 / laser_frequency) / (num_readings); scan.range_min = 0.0; scan.range_max = 100.0; scan.ranges.resize(num_readings); scan.intensities.resize(num_readings); for(unsigned int i = 0; i < num_readings; ++i){ scan.ranges[i] = ranges[i]; scan.intensities[i] = intensities[i]; } scan_pub.publish(scan); ++count; r.sleep(); } } 12.3 Publishing PointClouds over ROS For storing and sharing data about a number of points in the world, ROS provides a sensor_msgs/PointCloud message. This message, as shown below, is meant to support arrays of points in three dimensions along with any associated data stored as a channel. For example, a PointCloud could be sent over the wire with an “intensity” channel that holds information about the intensity value of each point in the cloud. #This message holds a collection of 3d points, plus optional additional information about each point. #Each Point32 should be interpreted as a 3d point in the frame given in the header Header header geometry_msgs/Point32[] points #Array of 3d points ChannelFloat32[] channels #Each channel should have the same number of elements as points array, and the data in each channel should correspond 1:1 with each point a) Code to Publish a PointCloud Message #include

#include

int main(int argc, char** argv){

ros::init(argc, argv, “point_cloud_publisher”);

ros::NodeHandle n;

ros::Publisher cloud_pub = n.advertise(“cloud”, 50);

unsigned int num_points = 100;

int count = 0;

ros::Rate r(1.0);

while(n.ok()){

sensor_msgs::PointCloud cloud;

cloud.header.stamp = ros::Time::now();

cloud.header.frame_id = “sensor_frame”;

cloud.points.resize(num_points);

//we’ll also add an intensity channel to the cloud

cloud.channels.resize(1);

cloud.channels[0].name = “intensities”;

cloud.channels[0].values.resize(num_points);

//generate some fake data for our point cloud

for(unsigned int i = 0; i < num_points; ++i){ cloud.points[i].x = 1 + count; cloud.points[i].y = 2 + count; cloud.points[i].z = 3 + count; cloud.channels[0].values[i] = 100 + count; } cloud_pub.publish(cloud); ++count; r.sleep(); } } 13. Using robot state publisher When you are working with a robot that has many relevant frames, it becomes quite a task to publish them all to tf. The robot state publisher is a tool that will do this job for you. It helps you to broadcast the state of your robot to the tf transform library. You can use the robot state publisher as a standalone ROS node , or , as a library. 13.1 Running as a ROS node The easiest way to run the robot state publisher is as a node. For normal users, this is the recommended usage. You need two things to run the robot state publisher: * A urdf xml robot description loaded on the Parameter Server. * A source that publishes the joint positions as a sensor_msgs/JointState. robot_state_publisher uses the URDF specified by the parameter robot_description and the joint positions from the topic joint_states to calculate the forward kinematics of the robot and publish the results via tf. that asy, state_publisher node Subscribed topics joint_states to joint position information , and , parameter robot_description to get urdf. 13.2 launch file Once you have setup the XML robot description and a source for joint position information, simply create a launch file like this:

13.3 Running as a library

Advanced users can also run the robot state publisher as a library, from within their own C++ code. After you include the header:

#include

Every time you want to publish the state of your robot, you call the publishTransforms functions:

// publish moving joints

void publishTransforms(const std::map<std::string, double=””>& joint_positions,

const ros::Time& time);

//The first argument is a map with joint names and joint positions,

//the second argument is the time at which the joint positions were recorded.</std::string,>

// publish fixed joints

void publishFixedTransforms();

ALSO, anvanced may be using urdf with robot_state_publisher.

14 Using urdf with robot_state_publisher

a full example of a robot model with URDF that uses robot_state_publisher.

First, we create the URDF model with all the necessary parts.

Then we write a node which publishes the JointState and transforms.

Finally, we run all the parts together.

14.1 Create the URDF File

Here is the URDF file for a 7-link model roughly approximating R2-D2.

Save the following link to your computer: model.xml

14.2 Publishing the State

Now we need a method for specifying what state the robot is in. To do this, we must specify all three joints and the overall odometry.

Create a package:

$ roscd

$ cd ..

$ catkin_create_pkg r2d2 roscpp rospy tf sensor_msgs std_msgs

$ nano src/state_publisher.cpp

#include

#include

#include

#include

int main(int argc, char** argv) {

ros::init(argc, argv, “state_publisher”);

ros::NodeHandle n;

ros::Publisher joint_pub = n.advertise(“joint_states”, 1);

tf::TransformBroadcaster broadcaster;

ros::Rate loop_rate(30);

const double degree = M_PI/180;

// robot state

double tilt = 0, tinc = degree, swivel=0, angle=0, height=0, hinc=0.005;

// message declarations

geometry_msgs::TransformStamped odom_trans;

sensor_msgs::JointState joint_state;

odom_trans.header.frame_id = “odom”;

odom_trans.child_frame_id = “axis”;

while (ros::ok()) {

//update joint_state

joint_state.header.stamp = ros::Time::now();

joint_state.name.resize(3);

joint_state.position.resize(3);

joint_state.name[0] =”swivel”;

joint_state.position[0] = swivel;

joint_state.name[1] =”tilt”;

joint_state.position[1] = tilt;

joint_state.name[2] =”periscope”;

joint_state.position[2] = height;

// update transform

// (moving in a circle with radius=2)

odom_trans.header.stamp = ros::Time::now();

odom_trans.transform.translation.x = cos(angle)*2;

odom_trans.transform.translation.y = sin(angle)*2;

odom_trans.transform.translation.z = .7;

odom_trans.transform.rotation = tf::createQuaternionMsgFromYaw(angle+M_PI/2);

//send the joint state and transform

joint_pub.publish(joint_state);

broadcaster.sendTransform(odom_trans);

// Create new robot state

tilt += tinc;

if (tilt<-.5 || tilt>0) tinc *= -1;

height += hinc;

if (height>.2 || height<0) hinc *= -1; swivel += degree; angle += degree/4; // This will adjust as needed per iteration loop_rate.sleep(); } return 0; } $ vi CMakeLists.txt Make sure to add the tf dependency in addition to the other dependencies: find_package(catkin REQUIRED COMPONENTS roscpp rospy std_msgs tf) Notice that roscpp is used to parse the code that we wrote and generate the state_publisher node. We also have to add the following to the end of the CMakelists.txt in order to generate the state_publisher node: include_directories(include ${catkin_INCLUDE_DIRS}) add_executable(state_publisher src/state_publisher.cpp) target_link_libraries(state_publisher ${catkin_LIBRARIES}) $ cd ../.. $ catkin_make 14.3 Launch File launch file assumes you are using the package name “r2d2” and node name “state_publisher” and you have saved this urdf to the “r2d2” package. $ vi launch/display.launch

TEST:

$ roslaunch r2d2 display.launch

$ rosrun rviz rviz

Choose odom as your fixed frame (under Global Options).

Then choose “Add” and add a ‘Robot Model’ Display and a ‘TF’ Display.

发表评论

Want to join the discussion?Feel free to contribute!